If there's one thing that will make even the most powerful computer feel like a 7-year-old rig, it's Adobe Lightroom paired with RAW files from any high-megapixel camera.

In my case, I spent over a year of spare time editing 848GB worth of 11,000+ 42-megapixel RAW photos and 4K videos from my New Zealand trip and making these nine photosets. I quickly realized that my two-year-old iMac was not up to the challenge.

In 2015 I took a stab at solving my photo storage problem with a cloud-backed 12TB Synology NAS. That setup is still running great. Now I just need to keep up with the performance requirements of having the latest camera gear with absurd file sizes.

I decided it was time to upgrade to something a bit more powerful. This time I decided to build a PC and switch to Windows 10 for my heavy computing tasks. Yes, I switched to Windows.

Update

June 2019

This post is about 1.5 years old now. While the vast majority of it is still relevant and accurate, one main thing has changed. I built a new, smaller PC to replace the one outlined in this article.

Everything in this article related to how I use Lightroom and how I built the PC remains accurate, so please read the rest of the post after you read this update, and feel free to follow and ask me questions on Twitter.

The updated PC setup in my NYC apartment.

Still a better choice than a Mac?

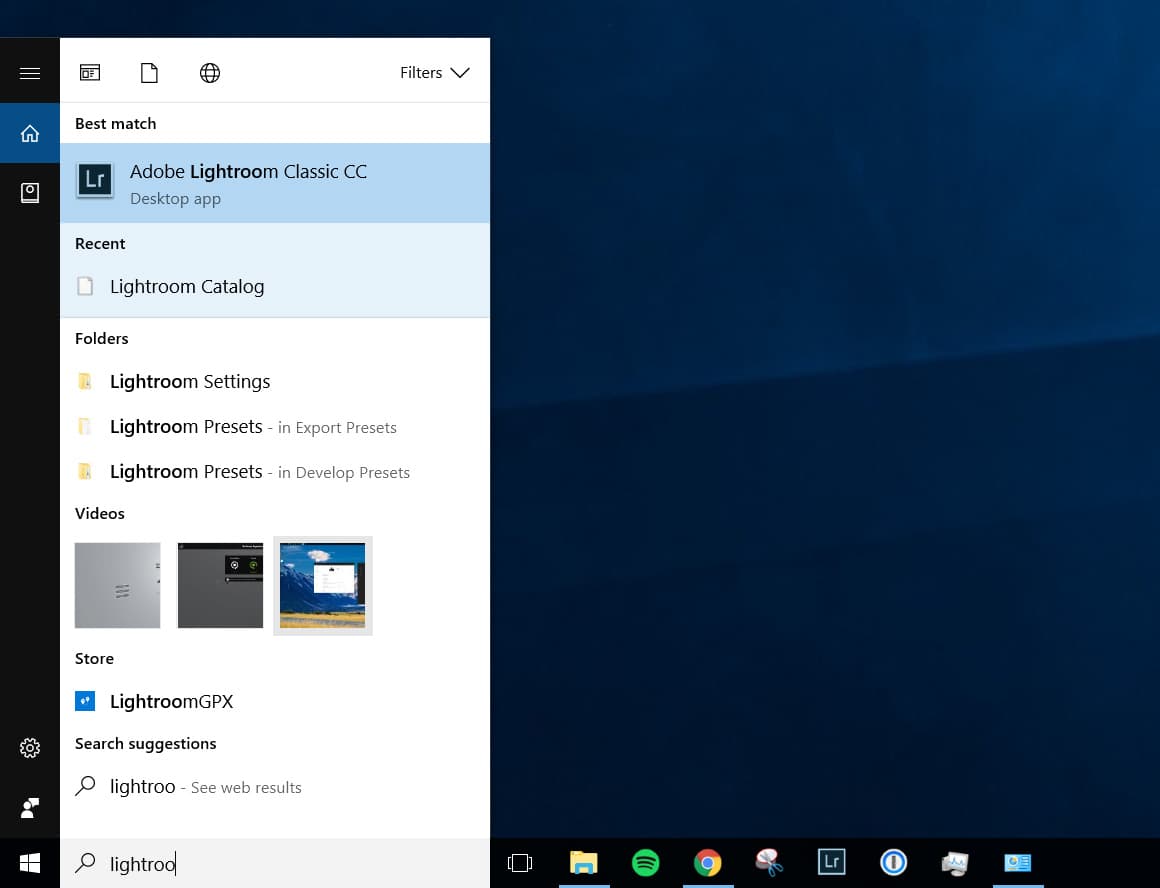

A custom PC for my photo-editing and development is definitely still the right choice for me.

When I wrote this post, the new Apple Mac Pro was purely a rumor and had not been announced. The only option was the iMac Pro, which has the same problem iMacs have always had: it doesn't come with the most bleeding-edge high-end components, and after you purchase it you can't upgrade it as easily as you can swap out a graphics card, or a motherboard and CPU, in a PC to jump to the next generation.

Then at WWDC 2019, Apple announced the new Mac Pro. And while it is a beast of a machine with custom graphics modules and can be configured with up to 28 cores and 1.5TB of RAM and can be paired along with an amazing 6K 32-inch Pro Display XDR, it is decidedly for actual creative professionals. Ones that can justify the minimum business expense of at least $11,000 for a base model Mac Pro and display. Not prosumers or advanced hobbyists like I might categorize myself.

It's exceedingly unlikely that the Mac Pro will find its way into many households, but rather fit in at creative agencies that will put it to work for advanced video editing and rendering.

So that leaves us where we started last year when I wrote this article: building a custom Windows 10 PC is a fantastic option for those desiring more power and the ability to continually and easily update the performance and capabilities of their computer as they see fit.

If anything, the Mac Pro news makes this the perfect time for you, the casual prosumer hobbyist photographer/videographer/gamer, to consider building your own PC.

Haven't yet updated my Lightroom PC post, but I recently built a tiny i9 9900K + RTX 2080 Ti PC https://t.co/FGRUr7O8Tg pic.twitter.com/gEi0agfWRp

— Paul Stamatiou 📷 (@Stammy) June 4, 2019

Why did you build a new PC?

In February 2019 I said goodbye to San Francisco—my home for almost 9 years—and moved to New York. In preparation for the big cross-country move, I wanted to slim down some of my possessions. I fully expected I would end up in a smaller apartment in New York than what I had in San Francisco.

My large desktop computer tower was my first target. (I also downsized to a smaller NAS storage device). This time I knew I wanted to go for a much smaller SFF (Small Form Factor) mini-ITX build, but something that wouldn't compromise on performance too much, or at all.

I was excited to build another SFF PC. Long ago I became acquainted with Shuttle XPC brand barebone SFF PCs and had built three over the years, including an AMD64 system running Gentoo Linux with a minimal window manager I loved at the time called fluxbox that I took to college my freshman year.

Not much has changed in the Intel landscape since my last build. The chips are still based on a 14nm process, but Intel now has 8-core chips based on Coffee Lake using a newer Z390 chipset. The other noticeable change has been Nvidia's release of their RTX line of graphics cards. AMD has some interesting stuff coming out soon with their 7nm 16-core Ryzen 9 3950X chip, and I am eager to see how Lightroom performs on it once it is released. Historically, though, Lightroom has been most performant on Intel processors, and it has diminishing returns beyond 8 cores except for certain tasks (such as exporting) that aren't a priority for me.

The other thing that has changed in the last year and a half is that Adobe Lightroom Classic CC—since renamed to just Lightroom Classic—has received a steady stream of updates addressing performance. While it has not been a massive difference, Lightroom Classic does seem to be markedly improved at using additional cores, especially with respect to the Develop module where I spend most of my time in Lightroom.

2019 PC build parts

When I began to think about what I would put in this PC, I knew I did not want to compromise on the CPU or GPU. I picked the 8-core Intel i9 9900K and Nvidia RTX 2080 Ti graphics card. It took a while to find the RTX 2080 Ti in stock at the time I was looking for it, and in a 2-slot version that would fit in a small form factor case.

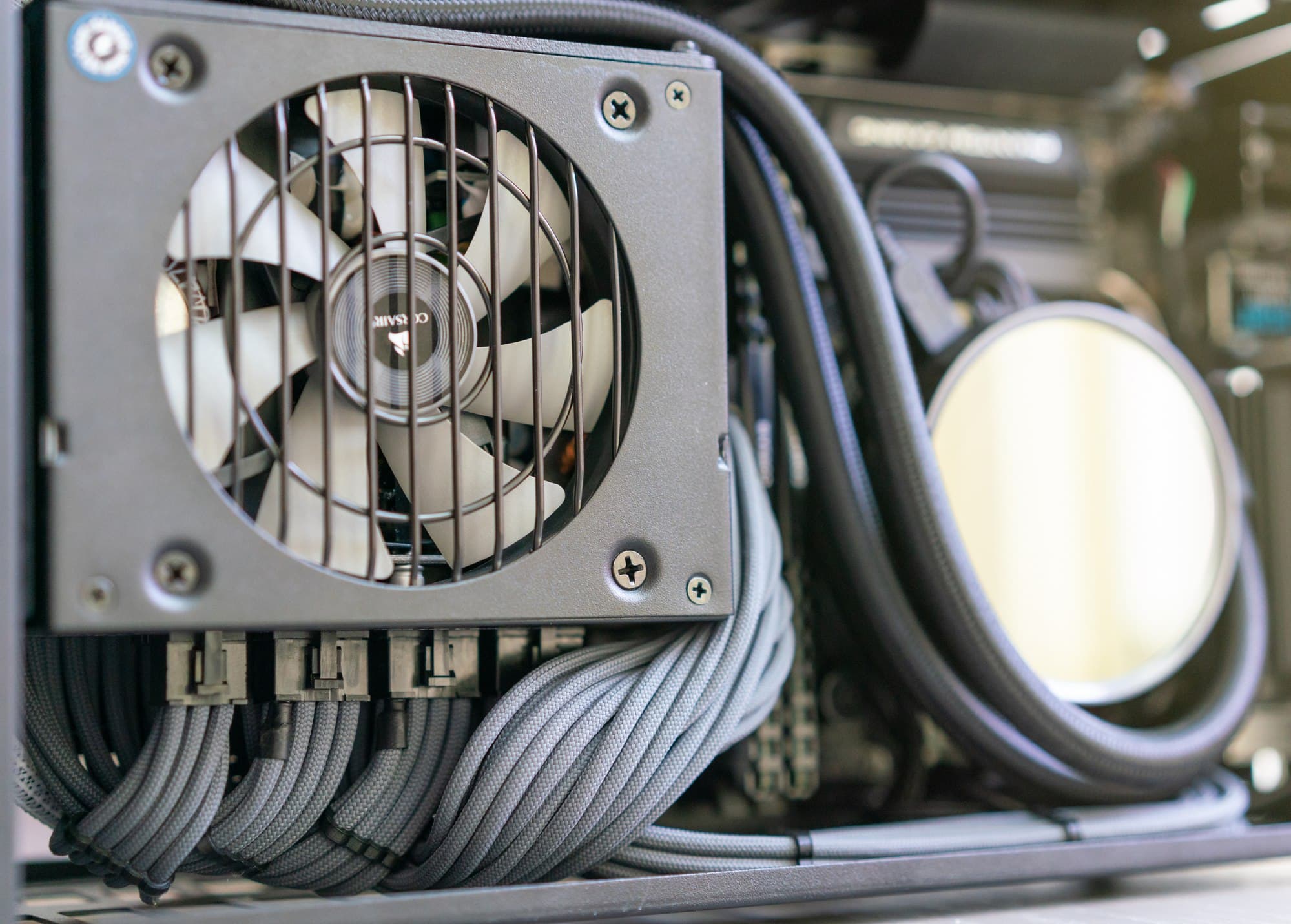

For the power supply I knew I would be getting the Corsair SF600 Platinum SFX form factor power supply that had just been released, following great reviews of the SF600 Gold version. (As I write this, Corsair now sells an SF750 Platinum model.) There were only a few models of mini-ITX Z390 chipset motherboards out at the time so I went with an ASRock model that seemed to have good-sized heatsinks on the PWMs and VRMs, but didn't have a massive shroud that could interfere with whatever cooling or heatsink I wound up putting on the CPU.

For storage I had been very happy with the Samsung 960 Evos in my last build, so I went with a larger 2TB 970 Evo for this computer. Then I took one of the two 1TB Samsung 960 Evos from my last build for a combined 3TB of storage. When it came to picking RAM I was concerned about the height of RAM sticks and how they might interfere with various cooling options for the CPU, so I went with low-profile RAM from Corsair that was made for confined spaces and didn't have overly tall heatsinks.

At this point I was certain I was going to build this computer into the Dan Case A4-SFX that I had already purchased over a year ago and had lying around. I began researching air cooling options for my 9900K. And that's when it became clear how tight the dimensions for this case were. There were only a few common air cooling options and none felt like they could handle the 95W TDP of the 9900K; and definitely not overclocked and definitely not quietly. Another popular option for that case was using a single fan radiator AIO watercooler. However, that would make for an extremely cramped build (some folks would even use an external power supply!) and one that still wouldn't handle cooling the 9900K that well.

It seemed like I was backed into a corner, and I began considering sticking with my 6-core 8700K or choosing some other processor that would generate less heat. Instead, I decided to sell the A4-SFX case and get something a bit larger that could accommodate my hardware needs but still be considerably more compact than my previous desktop tower.

I went with the lovely Louqe Ghost S1.

The Louqe Ghost S1 is a tad larger than the A4-SFX case (7.2L vs the S1's 8.2L) but features a really interesting chassis design that allows for not only the sides of the case, but also the top to be removed. Then, if you so choose, you can add a "tophat" that extends the case to provide a bit more extensibility for whatever you need: be it more space for hard drives or added room for fans or other cooling solutions.

The downside of adding a tophat is that it does of course make the case taller and a bit less aesthetically appealing. I initially considered simply air cooling the 9900K in the Ghost S1. Despite the confined space, there are considerably more air cooling options for the Ghost S1, and they've been put to the test by Louqe themselves.

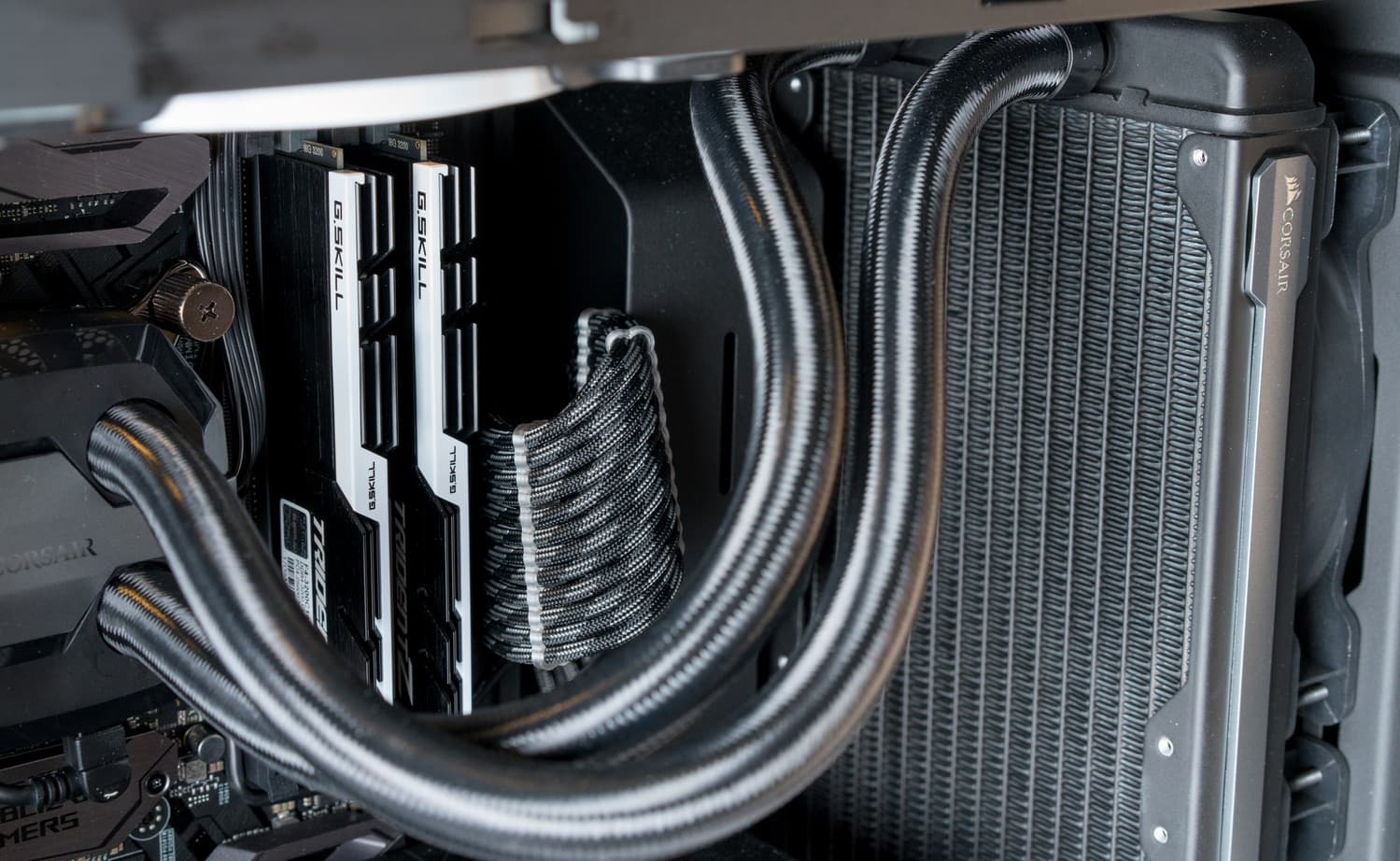

But I still thought that air cooling would not leave me with much headroom to overclock if I wanted to on the 9900K and may be louder as well. I went forward with a large tophat and installed the Kraken X52 AIO watercooler with a 240mm radiator.

Here's the complete parts list for this build:

- CPU (delidded): Intel Core i9 9900K

- CPU cooler: NZXT Kraken X52 AIO liquid CPU cooler

- Graphics card: EVGA Nvidia RTX 2080 Ti XC Black

- Motherboard: ASRock Z390 Phantom Gaming ITX/ac

- RAM (low-profile): 2x16GB Corsair Vengeance LPX DDR4-3000 CL15

- Storage: 2TB Samsung 970 EVO M.2 SSD

- Storage (taken from late 2017 build): 1TB Samsung 960 EVO M.2 SSD

- PSU: Corsair SF600 Platinum

- Case: Louqe Ghost S1 (MkI) with large tophat

- PSU cables: Custom paracord sleeved cables

Completed build

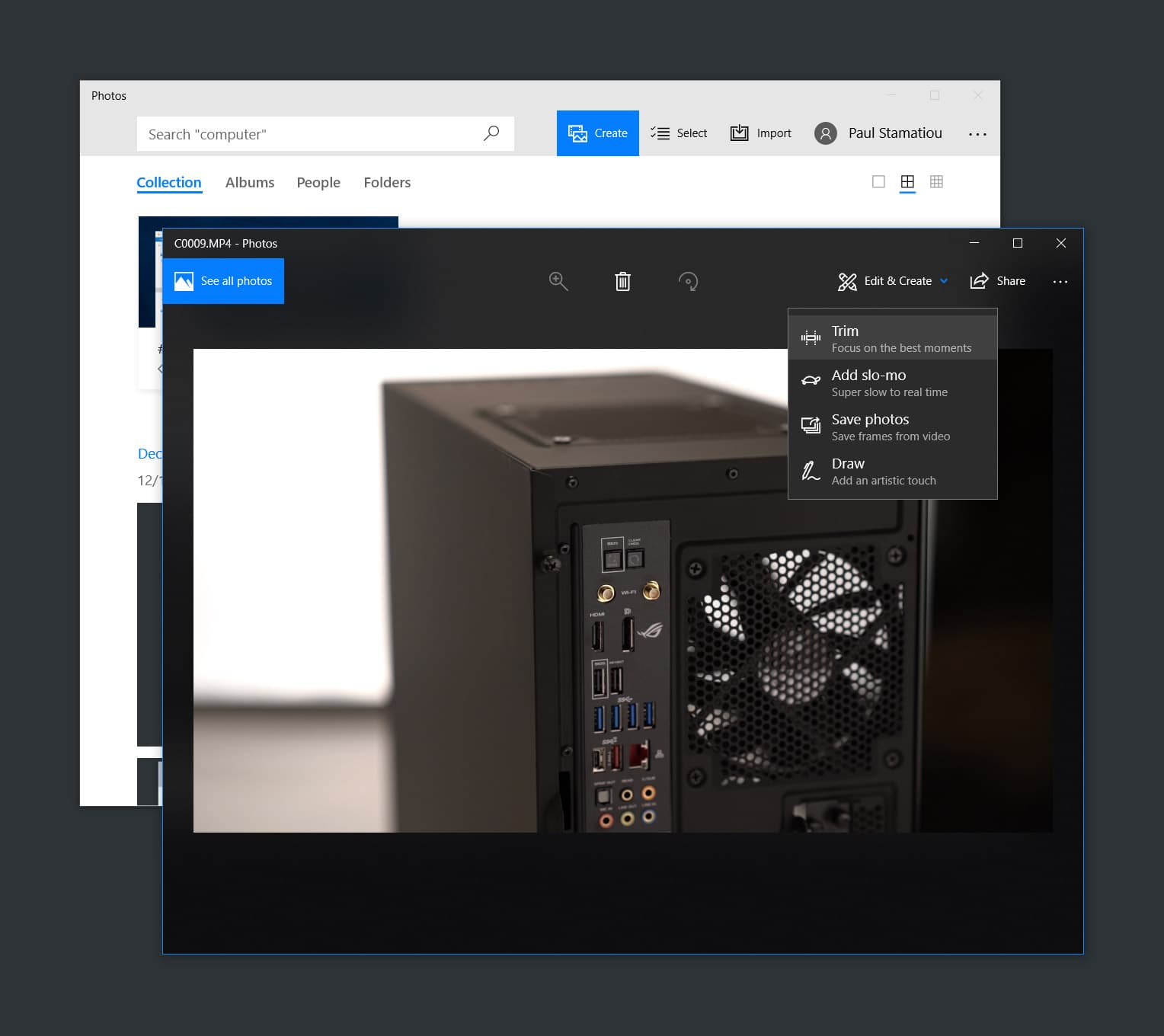

I ordered all the parts between December 2018 and January 2019 and was eager to build the new PC right away. Unfortunately, I was about to move and decided it would be safer to have the components shipped instead of the assembled computer, so I had to hold off until I finished moving to New York. Finally, about 2 months after I had packed everything, I was able to build the computer:

Okay got it functionally built today. But jeez it needs custom short cables for everything. So cramped. (Case panels and top shroud hat not attached) Watercooled delidded i7 9900k RTX 2080 Ti 2TB 970 Evo ssd 1TB 960 Evo ssd 32GB ram pic.twitter.com/DVw7O1adoV

— Paul Stamatiou 📷 (@Stammy) March 10, 2019

After the initial build I quickly realized how badly this build needed custom length modular PSU cables. The default Corsair cables were way too long for the confined space, leading to stuffing them anywhere I could to get them out of the way. It looked horrible and probably wasn't great for case airflow either.

After some research it quickly became apparent that the best option for custom cables was going to be from pslate customs. The cables are customized not only to the case but also the motherboard and graphics card to ensure optimal length, but also orientation: even in an individual cable, some wires are shorter than others to help promote a natural cable bend. It's pricey but the cables were great and aesthetically and functionally added quite a lot to the completed build.

Overall impressions

First off, I absolutely love the size of this case, even with the additional tophat. It's small enough to go on top of my desk and not be a bother.

Second, this computer is so quiet! Most of the time the PSU fan is completely off (a feature of the Corsair PSU for low loads) as well as the graphics card fans, leaving just the two large radiator fans spinning slowly (and the pump which I can't hear). It's noticeably quieter than my previous build. Of course, when dealing with a larger load the fans do ramp up, but the majority of the time it's whisper quiet.

Performance

I purchased the binned CPU (4.9GHz on all 8 cores at ~1.287V) from Silicon Lottery and had them delid it as well, so that's definitely contributing to my quiet setup and reasonable temps for the 9900K. By default the Intel i9 9900K has a base frequency of 3.6GHz, with a Turbo Boost ranging from 5GHz for 1-2 cores to 4.7GHz for all 8 cores. (I talk more about how Turbo Boost works later in this article).

While I'm still tinkering with my ideal overclocking settings, I'm running at around 4.9GHz stable on all 8 cores. I'll update this post when I have more info on my settings and idle/load temperatures.

Of course, my build is already slightly outdated as Intel just announced the i9 9900KS, an incremental update to the 9900K that allows both single-core and multi-core loads to ramp up to 5GHz, with a base frequency of 4GHz.

I no longer have my previous desktop computer with me (it now lives at home with my parents in Texas), so I couldn't run side-by-side benchmarks on the latest version of Lightroom like I did for the last build. You'll have to trust me that this build is faster. :-)

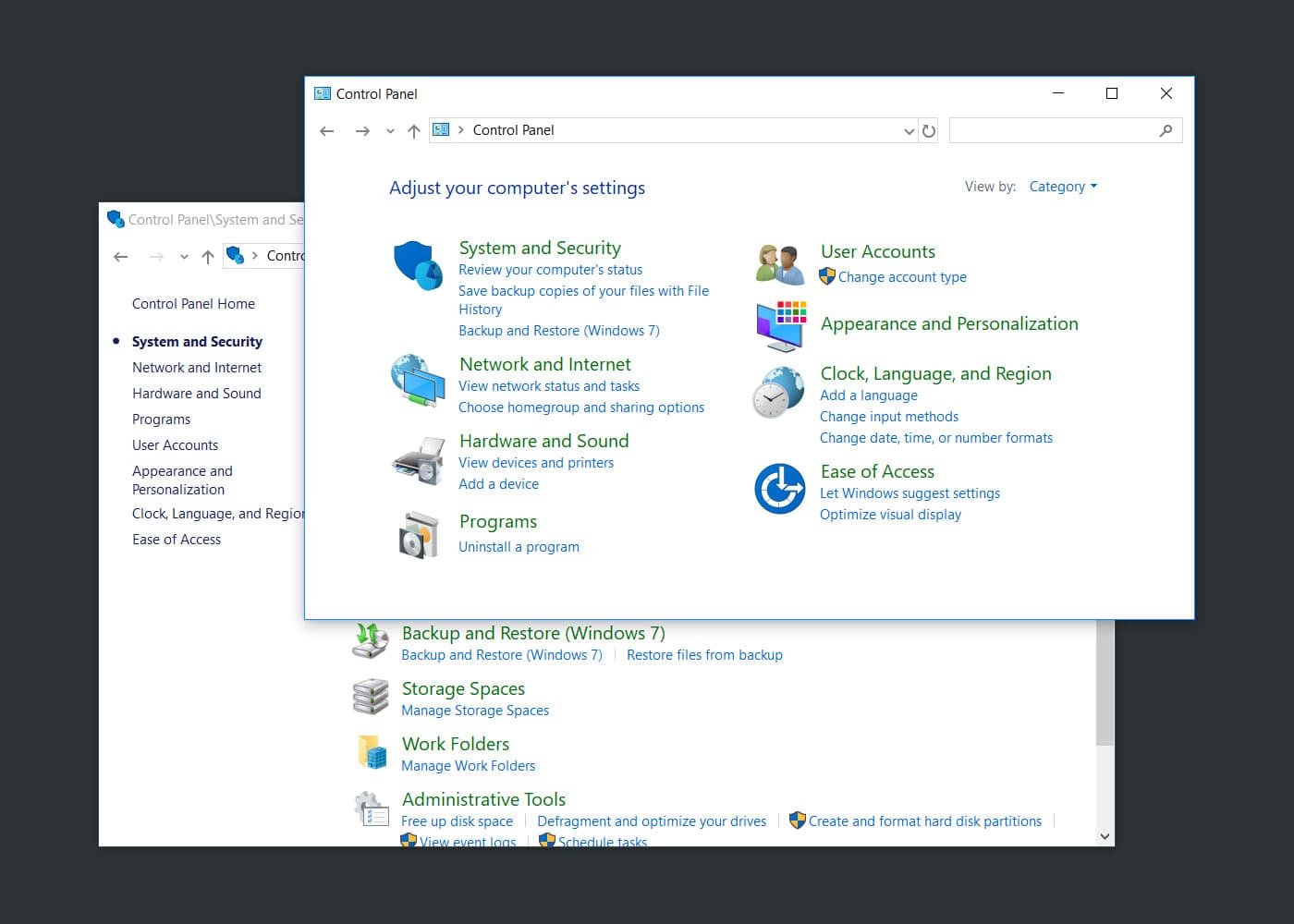

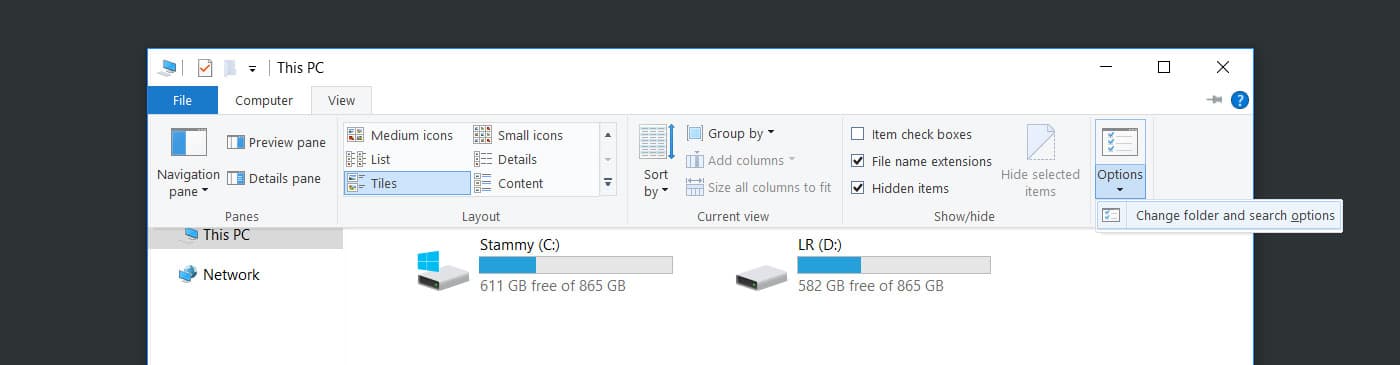

Misc updates

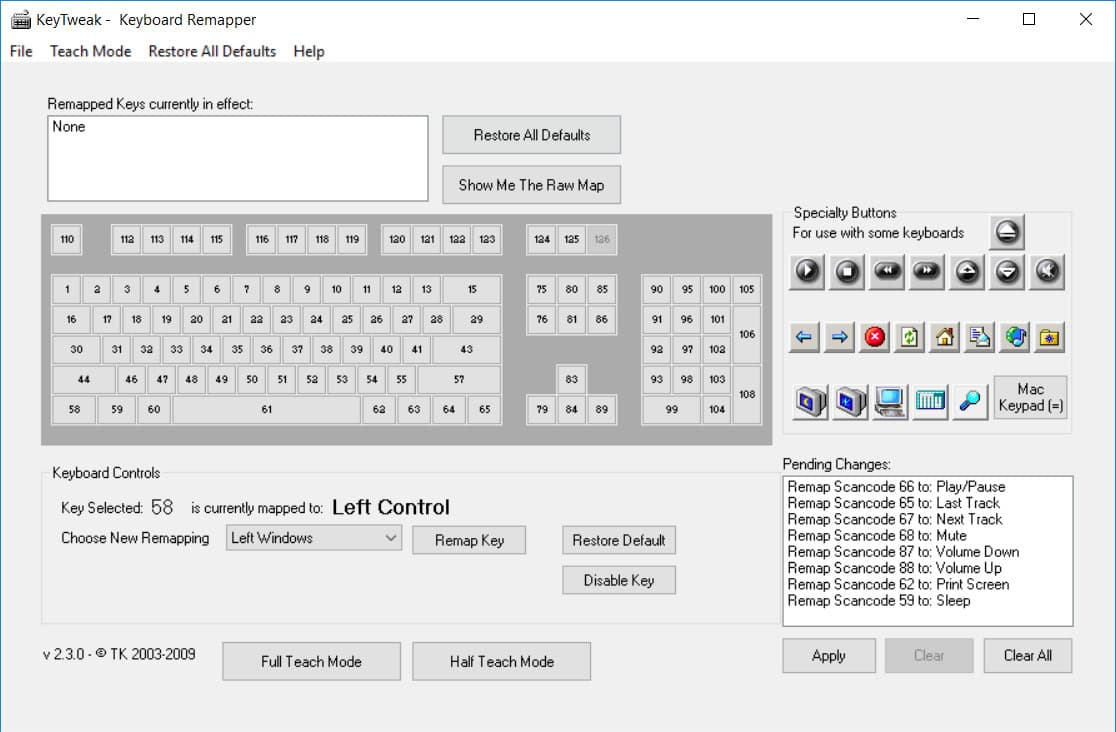

One thing I have discovered since writing this post is Windows 10 Debloater. It's an excellent script you run on a clean install of Windows 10 that will, as the name implies, debloat and uninstall all the extra cruft and silly games that Windows comes with by default.

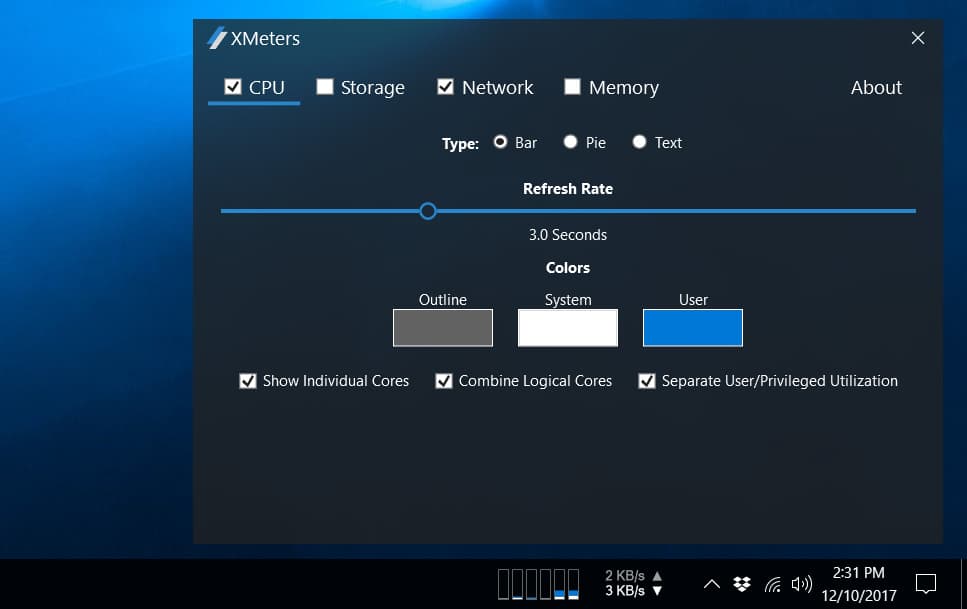

I also began using QuickLook for file previews instead of my previous recommendation, Seer. I have also become accustomed to modifying the time and date display in Windows by using T-Clock Redux. And finally, ProcessHacker gets an honorable mention and is something I've recently been using to dive deeper into system activity.

Read the rest

This update really doesn't change much for this post: just that I got updated parts. Most of this article will be accurate for a long time, so please continue reading. I had spent a few months of spare time writing it and hope you find some value in it. As always, if you have any questions as you're reading feel free to send me a Tweet!

A note to the reader

This is a long blog post. At over 32,000 words it is the longest I've written on this site, and it consumed many of my weekends for about 4 months. Typically these "I built a computer" posts are rather useless a few months down the line when new hardware comes out and it's nothing but an old parts list. While I can't avoid that, I aimed to provide enough information about my reasoning for why I chose certain parts or how I configured things so that this post may still be helpful a year or three down the line. Enjoy!

If you like this post, please share it with your friends, followers or anyone that might be interested.

What I use my computers for

For the last few years I have more or less had some variant of the same setup: a beefy desktop computer for heavy lifting and a small laptop for travel and casual use. My desktop usage, in order from most to least frequent, is largely comprised of Adobe Lightroom, web development for this website, Adobe Premiere Pro and some occasional gaming.

While I did love my 5K iMac, I hated that the only way to upgrade a year or two later was just to replace the entire thing. I hated that even the newest models were typically behind Intel's release schedule and you couldn't get the absolute latest and greatest hardware, much less be able to overclock them a bit for even more performance.

Apple has failed to provide the option for high-performance, user-upgradeable machines for years and even the new iMac Pro continues that trend. Perhaps the rumored upcoming Mac Pro will be different but I just don't see a world where you'll ever be able to hear about the latest Intel chipset and processor launch, immediately buy a new processor and motherboard and upgrade your Mac that weekend.

I'm not the only one with this mindset. More and more creative professionals that demand the most from their machines are getting over Apple for their high-end computing needs. Filmmaker Philip Bloom recently moved to a Windows machine. Photographer Trey Ratcliff did the same and I've been seeing more and more friends in the creative space do the same.

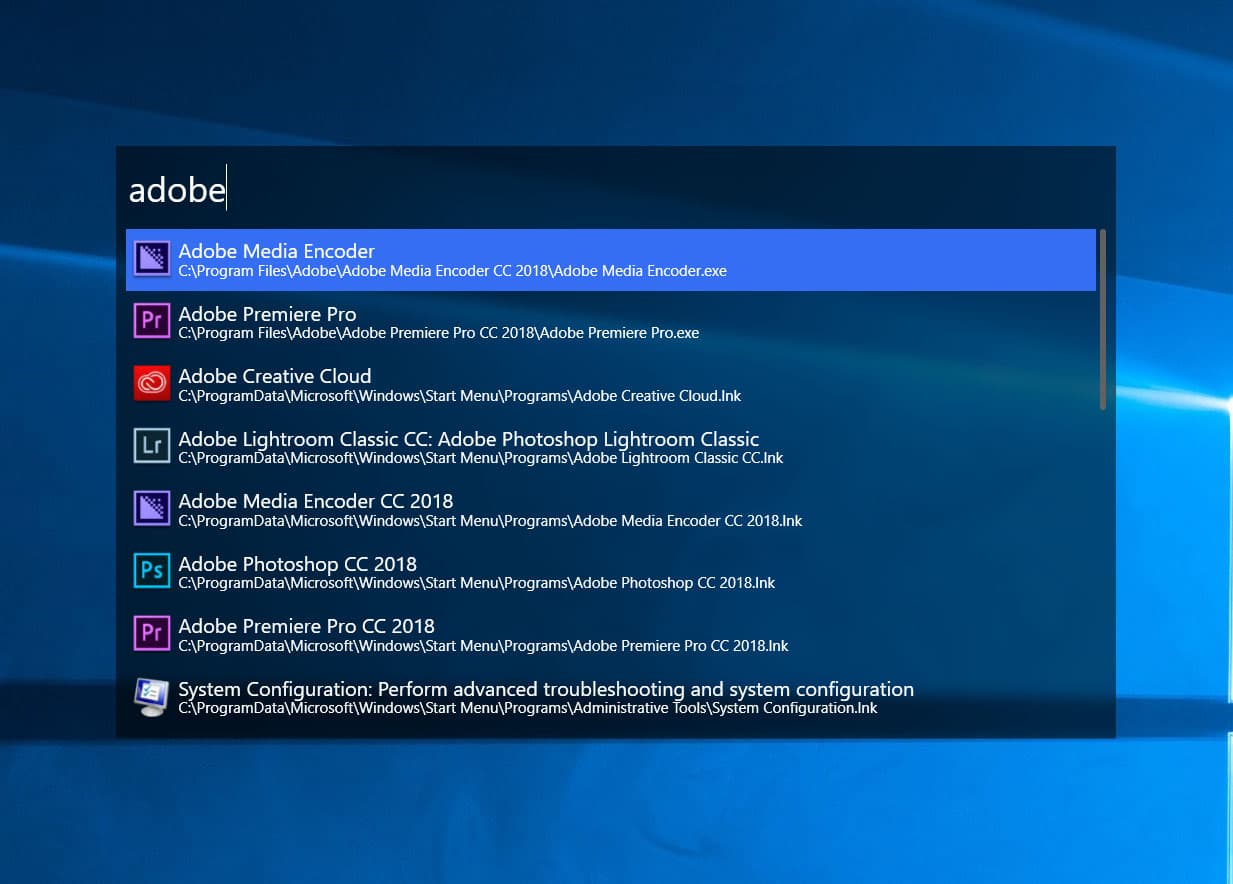

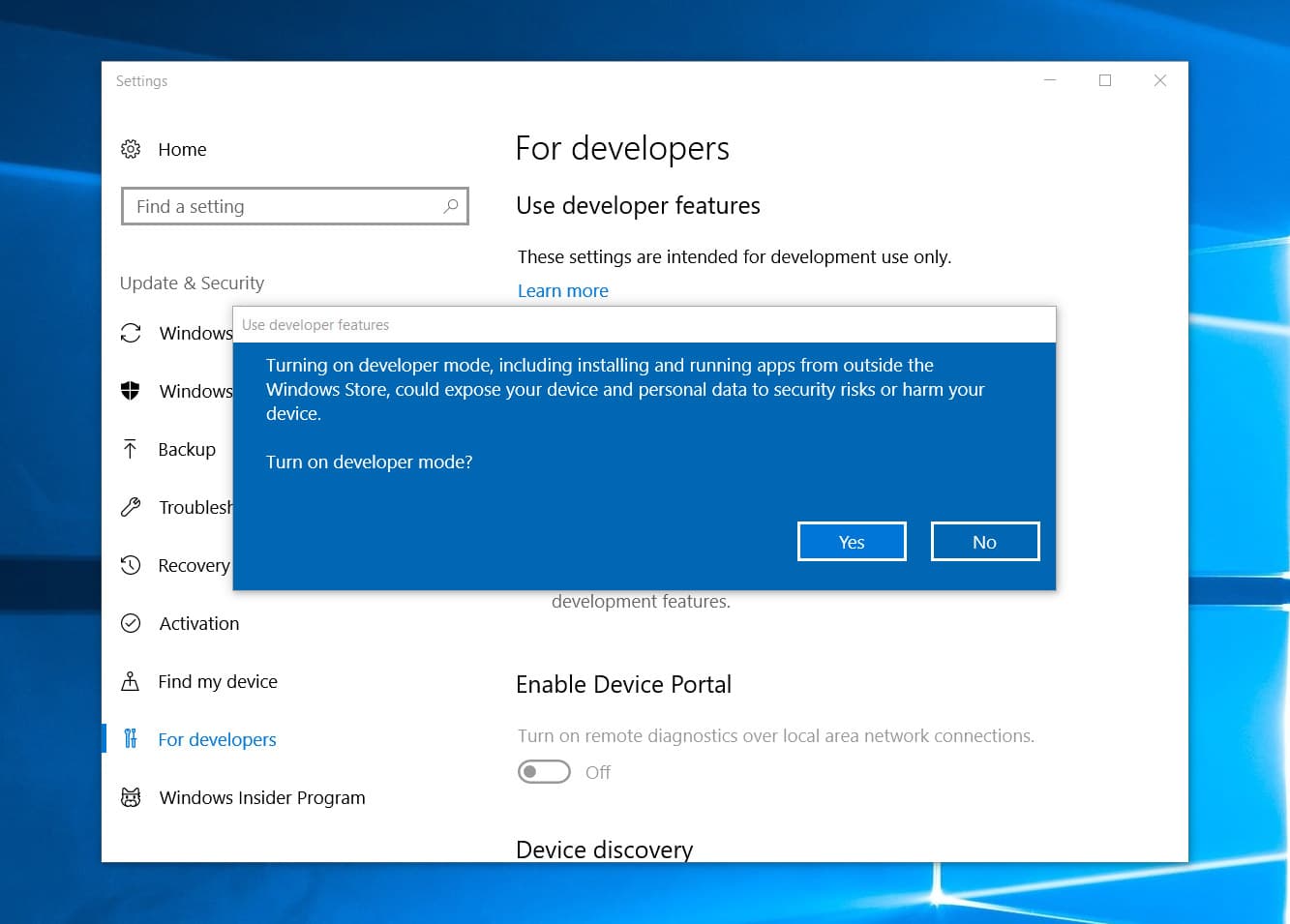

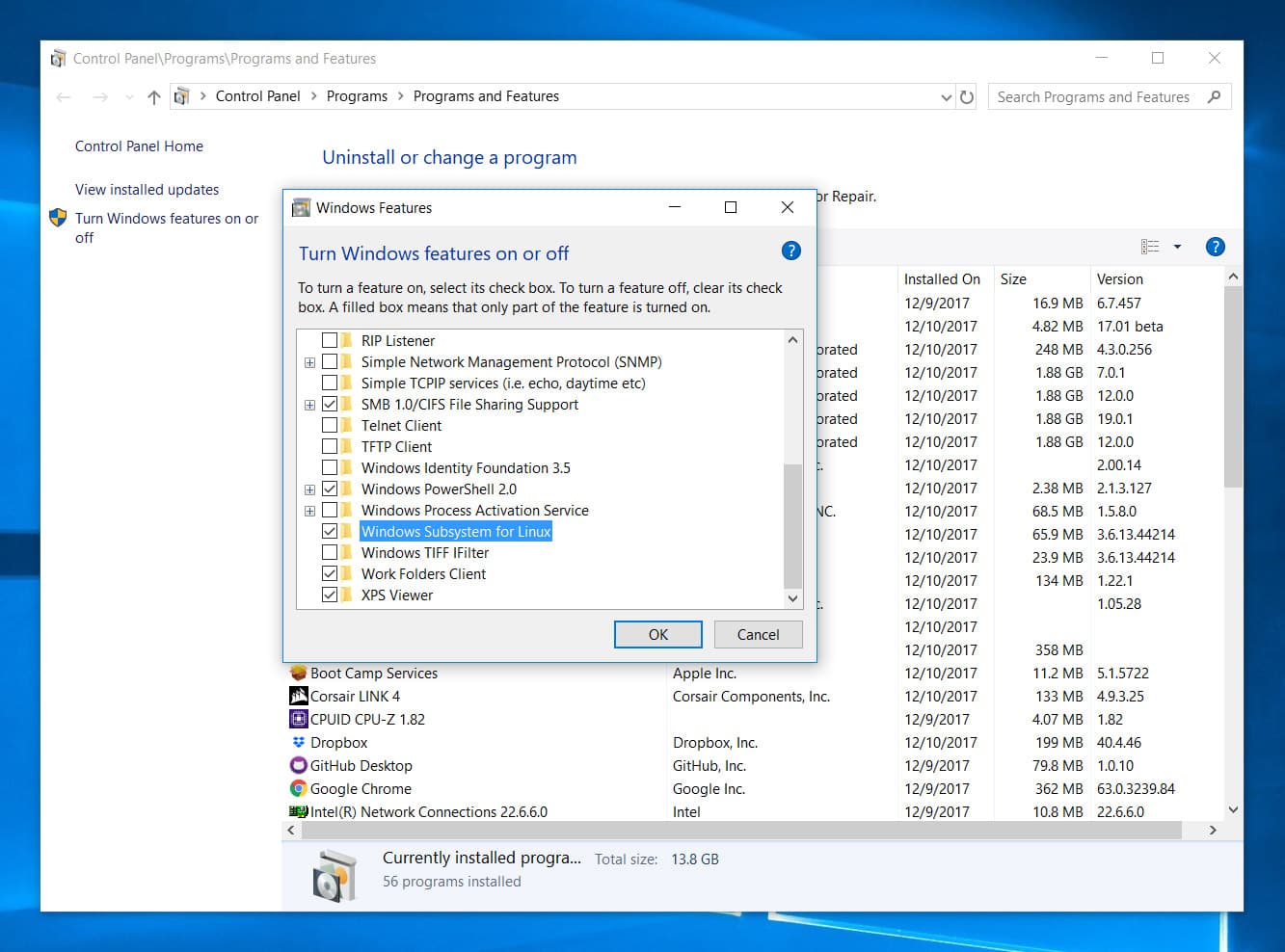

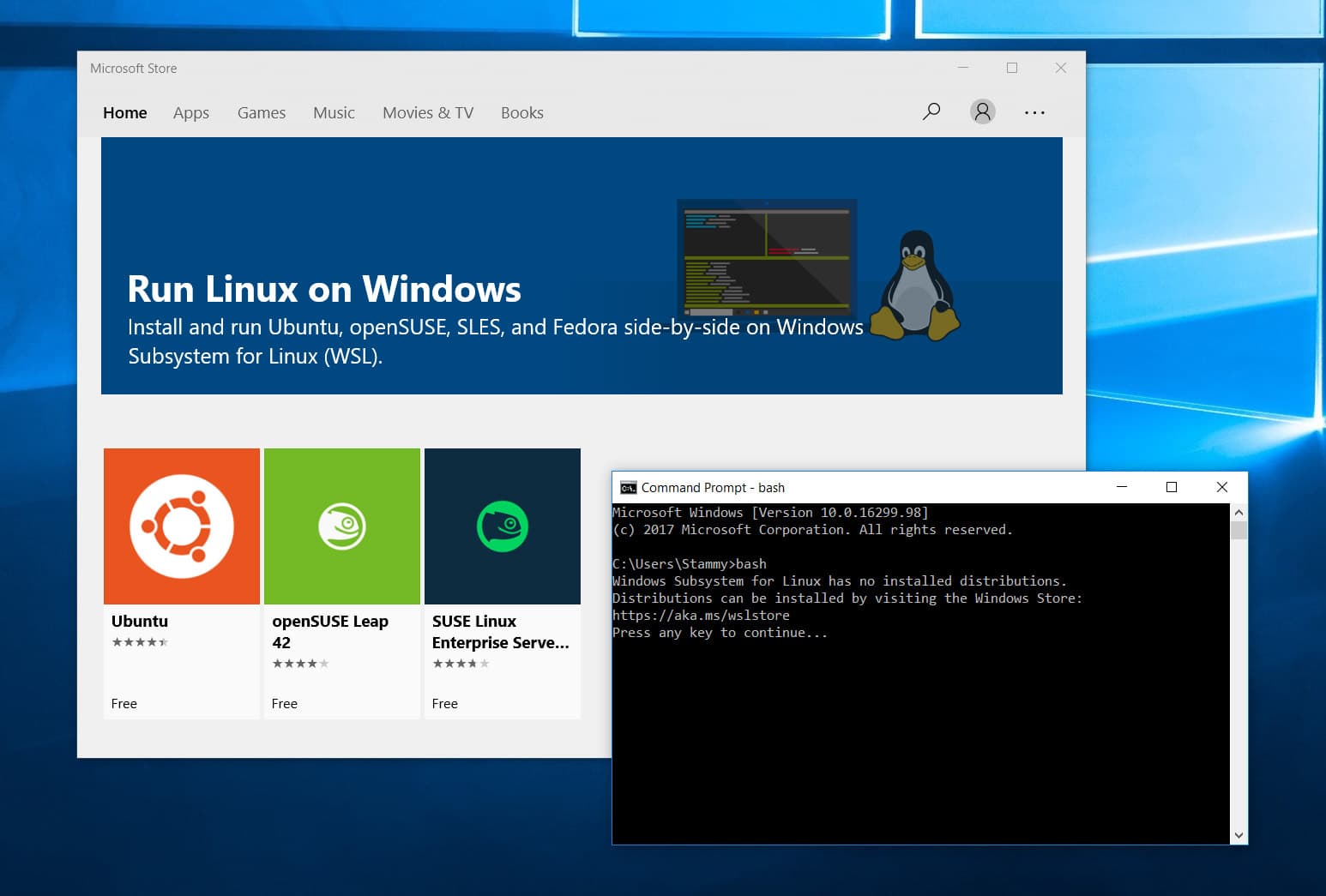

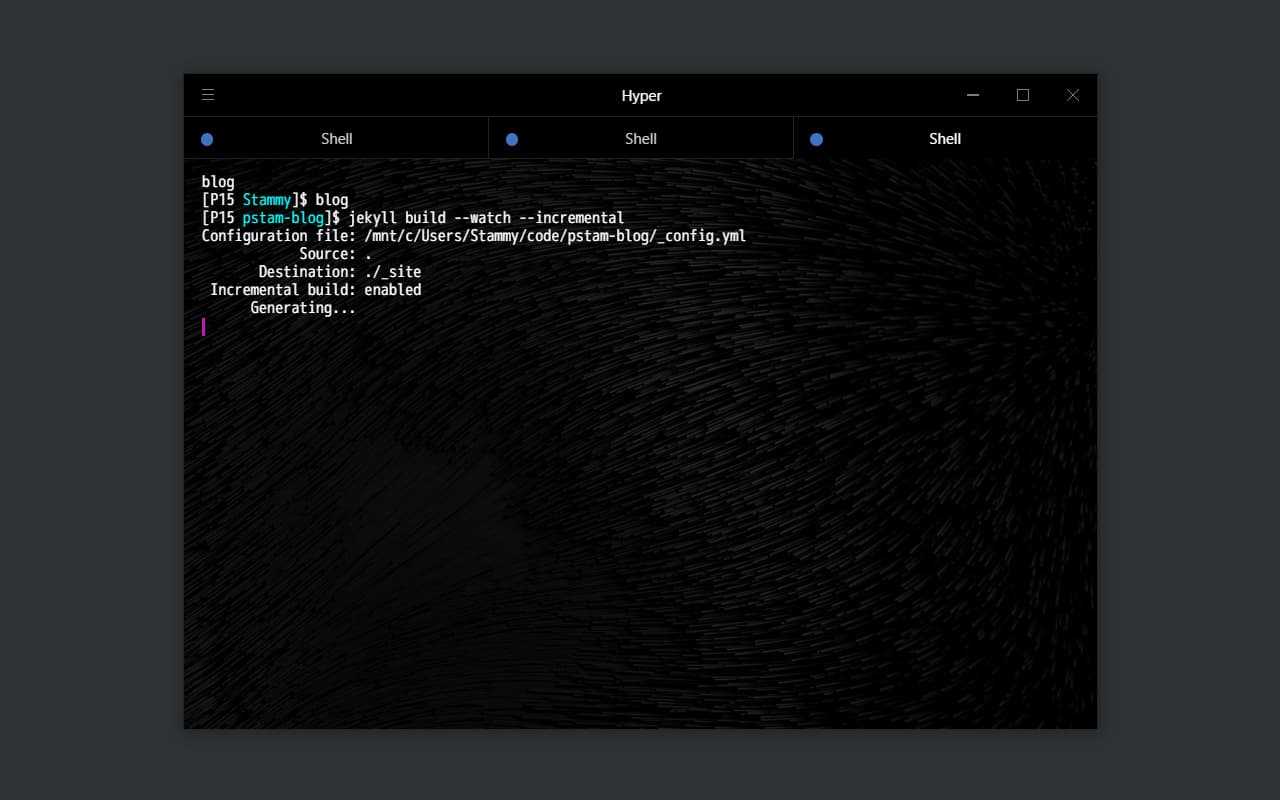

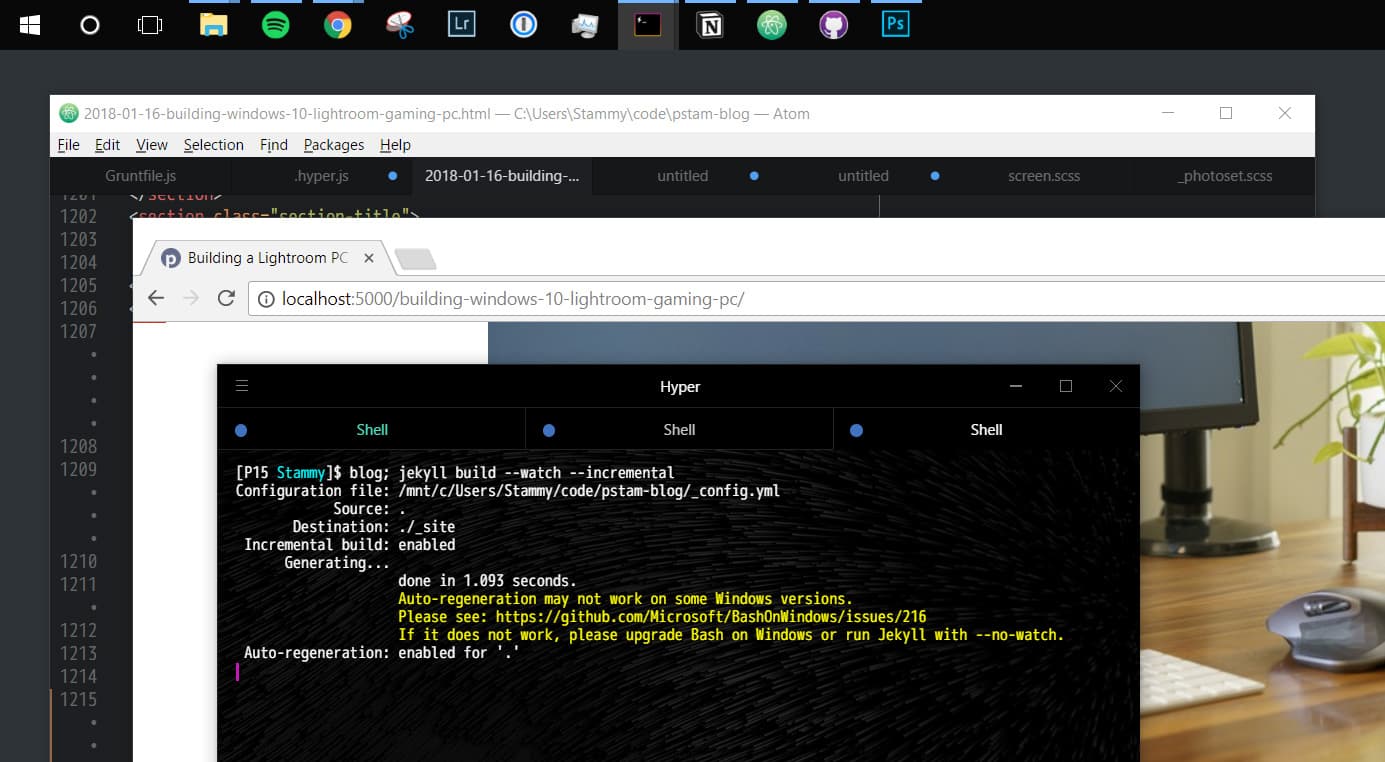

Nothing is really holding me to macOS on the desktop. I go in there, edit some photos or do another large task then I retreat to my 13" Macbook Pro.1 The Adobe suite works on Windows and there is now official Linux support via WSL on Windows 10 so I can run my development environment easily.

I would be lying if I didn't mention one of the main reasons I wanted to build a PC: finally having a modern full-size graphics card, for both GPU acceleration in creative applications as well as for gaming. With my iMac I casually played a few games on Steam, but with paltry settings. Even if I were to purchase a new high-end Mac, you just can't get the best graphics card on the market (even with the new iMac Pro2). Much less just be able to easily swap it out with a better card a year later.

When I began planning this new build around April 2017, I considered making it a dual-boot Hackintosh and Windows 10 PC. At the time a hackintosh build sounded promising: Kaby Lake processor support and Nvidia drivers for Pascal GPUs for macOS had just been announced.

Then I began thinking of how I actually use my computer. The idea of constantly rebooting to hop into Windows for a bit to play a game, then reboot to go back to macOS seemed like a major inconvenience. It also meant that I couldn't just upgrade to the newest hardware — I would have to wait for hackintosh support to arrive. Not to mention the associated hackintosh annoyances I've dealt with in the past: tricky software updates and reliability issues. I knew what I needed to do.

The goal: Build a fast, yet quiet and understated desktop PC with a healthy overclock aimed at improving my photo workflow while giving me the ability to upgrade parts of it later on.

What makes Lightroom fast?

But first, let's talk about what Lightroom needs to thrive.

One thing to note, and this kind of defeats the purpose of this whole post: I've never seen any hardware improvement, no matter how drastic, turn Lightroom into a pure speed demon when dealing with the kind of huge RAW files I work with. I might experience single digit to low double digit percentage improvements in certain tasks, but nothing that would blow my socks off. Nothing instant. If someone claims their Lightroom setup is instant, they're lying or they're working with tiny 12-megapixel JPGs. Here's more on the topic if you're interested.

In addition, any performance improvements gained on new hardware are often then negated when upgrading to newer and newer cameras that shoot higher megapixel photos and higher resolution and bitrate videos. It's a vicious cycle. I have even thought about downgrading to a lower megapixel camera to make editing easier, but I love being able to have room to crop photos and videos. And the extra megapixels help when I frame some of my photos.

What would make Lightroom really fast is the software itself receiving dramatic optimization and performance updates. It has been around for ages, and I'd imagine there is quite a bit of code cruft that Adobe would love to refactor and rethink. Adobe has even stated that they know Lightroom is slow and they're working on it. Nothing I can do here but cross my fingers and wait for software updates.

How I use Lightroom

Here's what I do in Lightroom that can feel slow.

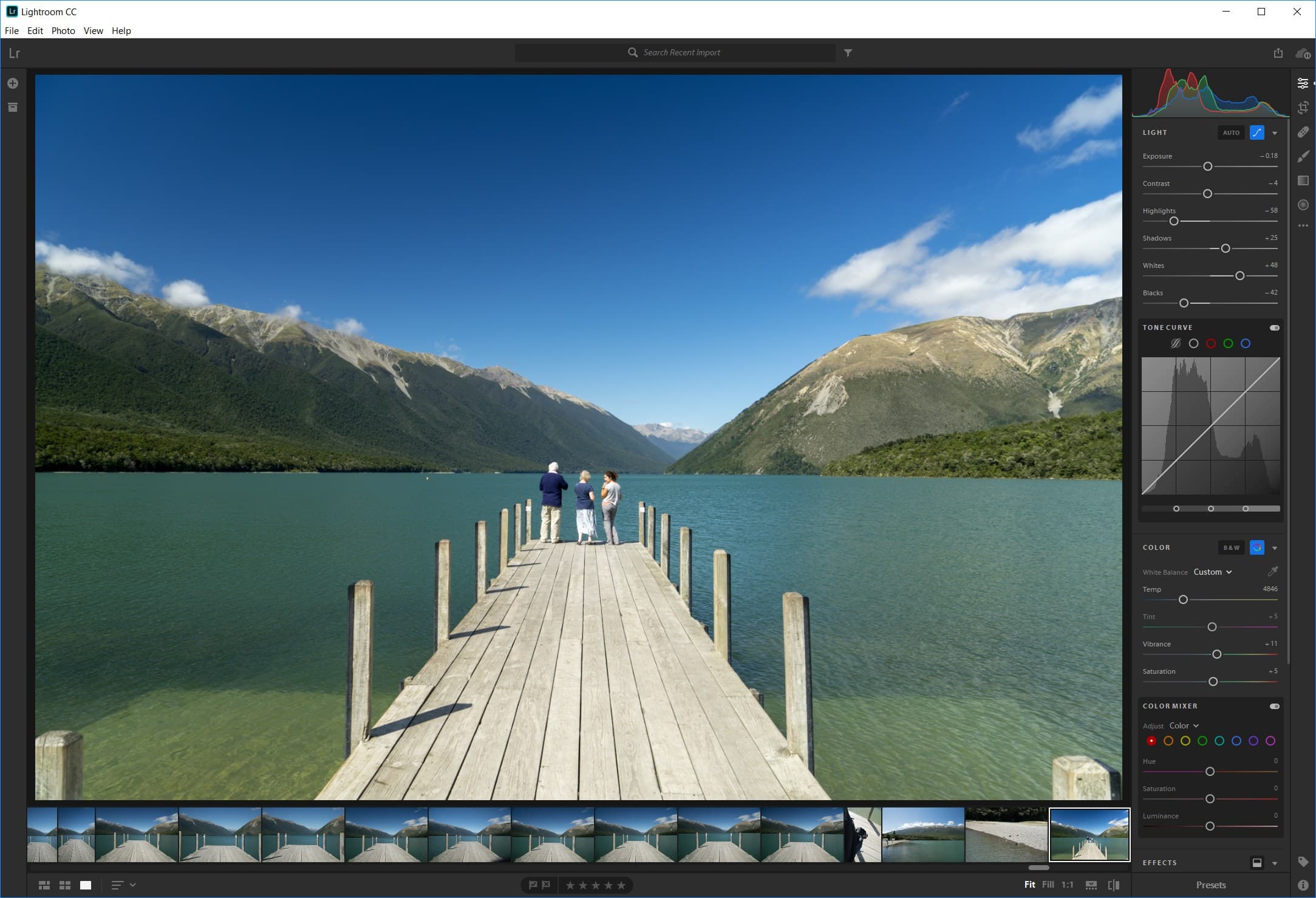

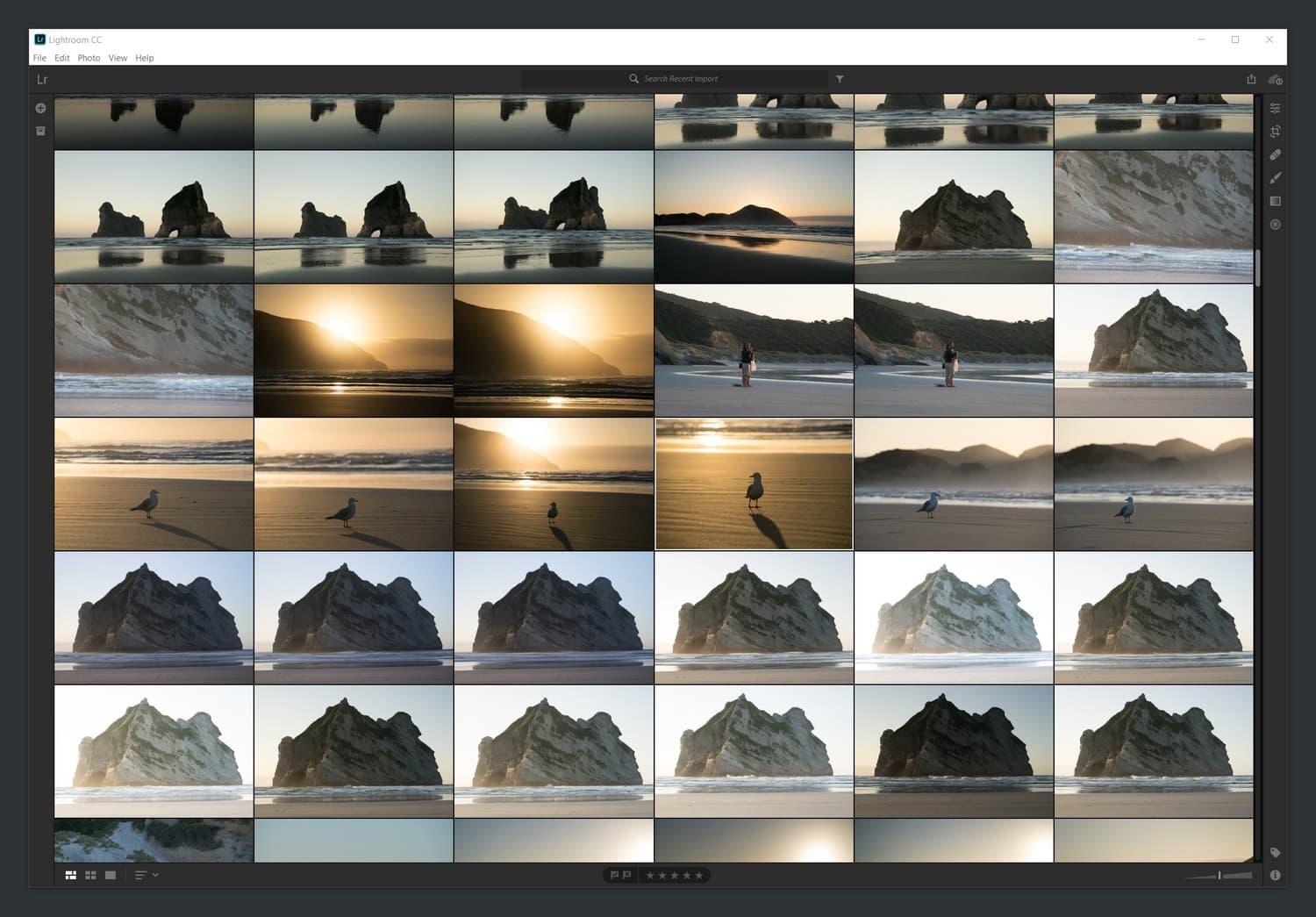

I spend a lot of time in Lightroom. What exactly do I do to my photos? Increasingly less and less (more on that below), but there are still quite a few tasks, from culling to pick the best shots out of hundreds or thousands all the way to numerous adjustments made individually on each photo.

I have been interested in photography for over a decade but didn't really start taking it seriously until I built out my photoblog and started crafting photosets of trips. At first I enjoyed making photos seem surreal and dramatic. I was all too eager to yank the saturation and clarity sliders and even use programs like Photomatix Pro and Aurora HDR that started out basically encouraging the creation of overly gaudy HDR images.3

Over the years I have tried to hone my photography aesthetic to be more realistic and only edit to try to capture what it was like to be there and see something with your own eyes: recovering highlights and shadows, removing spots created by a dirty lens in a long exposure, adjusting color temperature to communicate the warmth of that day, removing noise to share the clear night with bright stars and so on. And sure, sometimes that vibrance slider might find its way to +15 to accentuate some glacial blue water, but I rarely touch the saturation slider these days.

And like designing4 a product interface, there's just as much work, if not more, that goes into keeping things simple and having it communicate effectively. Sometimes I'll spend the most time leveling a shot and finding a good crop.

Sony A7R III with some of my lenses.

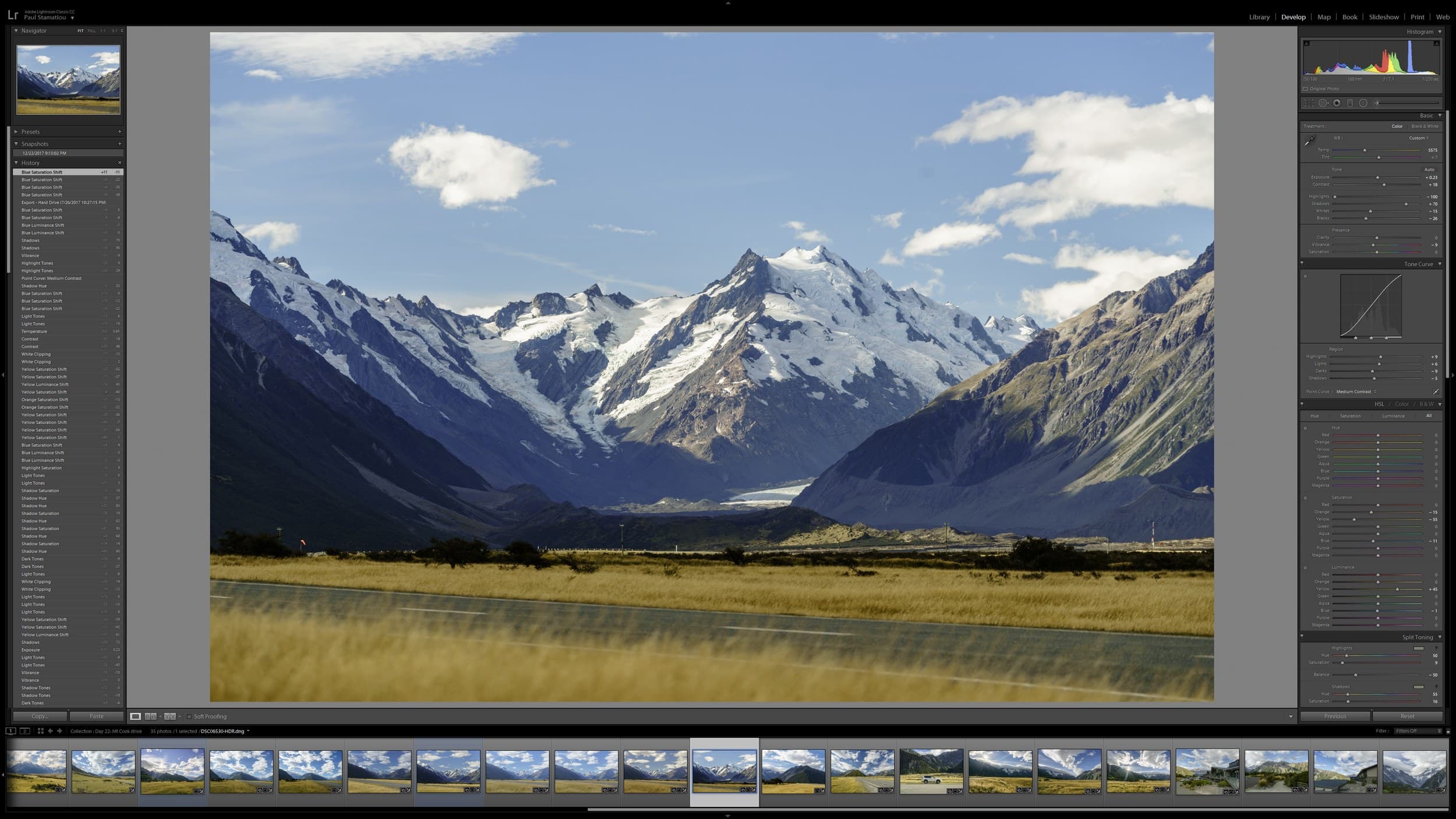

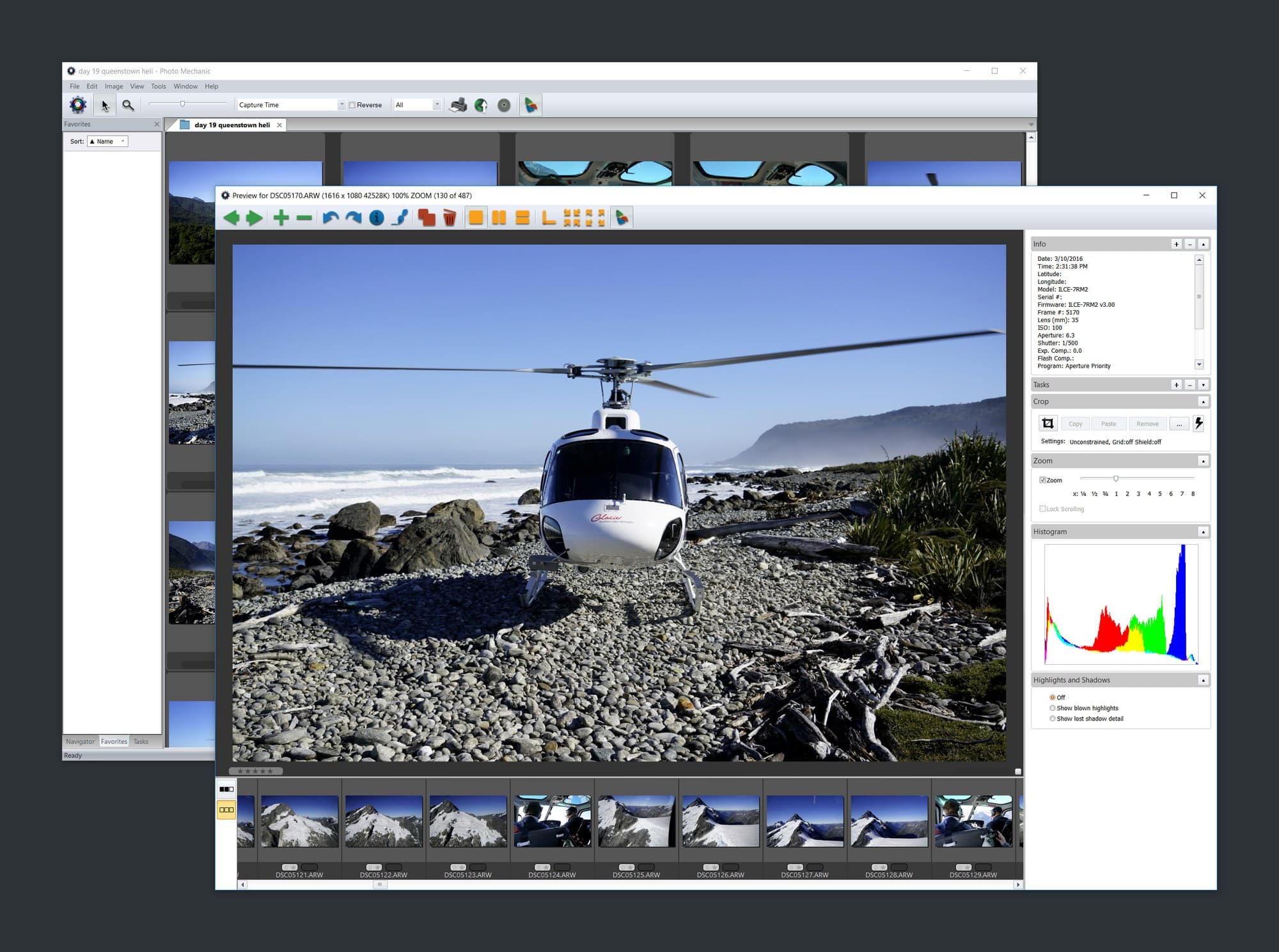

I love working in Lightroom on a high-res display in full-screen mode. I often zoom 100% into one of my Sony a7R III's massive 42MP RAW photos to find the sharpest and most in focus of several similar shots.

Unfortunately, all three of these behaviors incur a significant performance cost right off the bat.

If you're familiar with Lightroom you probably know about the different modules of the app. I spend the vast majority of my time in Develop module and some of my time in Library module. The different modules act as tabs — changing between them brings up a new set of functionality and contextual side panels.

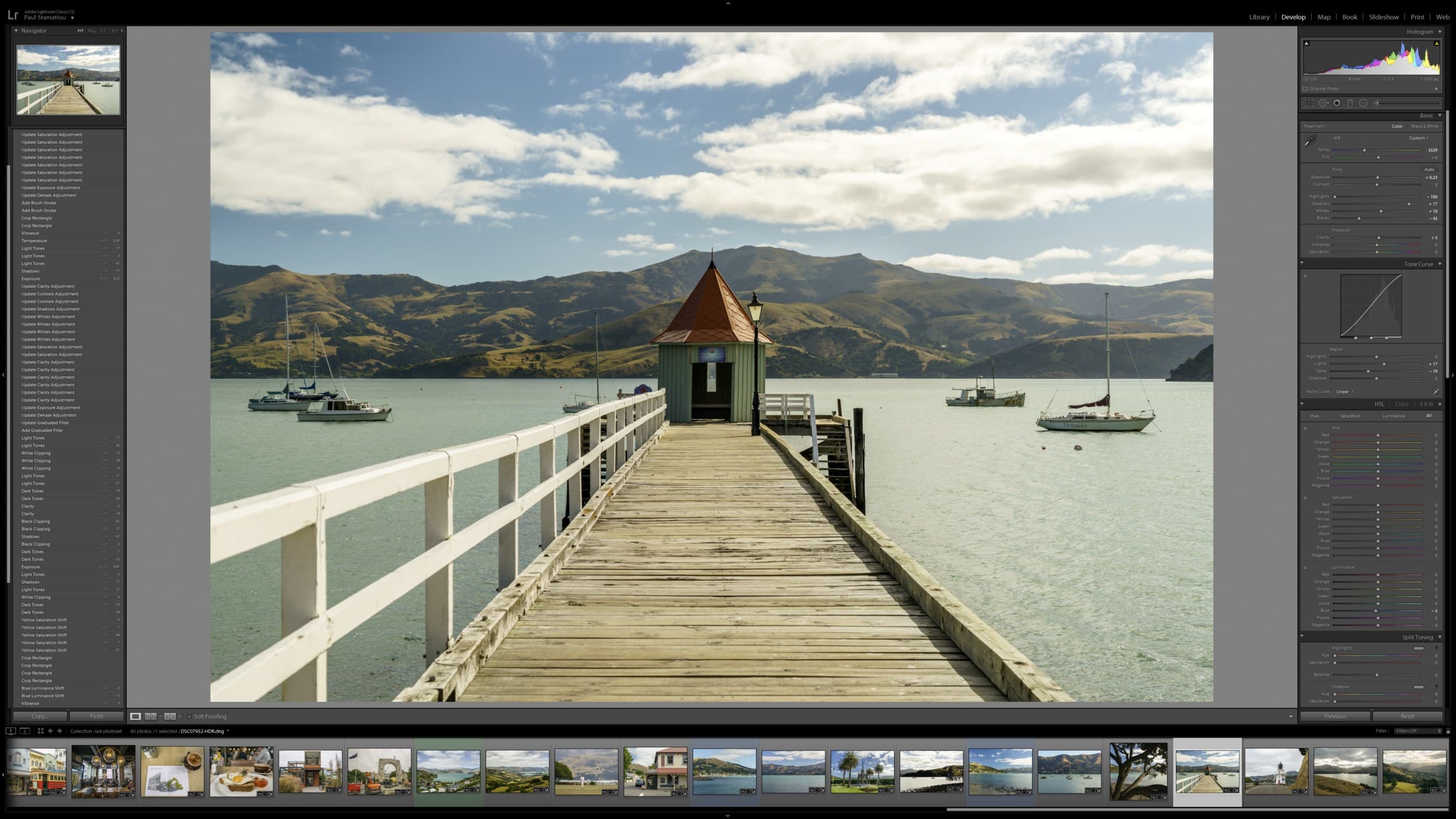

Lightroom Classic CC fullscreened at 3840x2160 in the Develop module working on an HDR image. Photo: Mount Cook, New Zealand

When I’m doing basic culling, I try to stick around in the Library module where there are performance benefits at the expense of not being able to do any real editing to the shots. It's possible to make filmstrip scrolling and browsing in the Library module fairly speedy by generating previews either manually or on import.

Generating previews in advance means that Lightroom doesn't have to fire up the Camera Raw engine to process and then cache a large compressed RAW file each time you click on a photo, an action that can take up to 3-5 seconds per photo on a large screen.

There are several kinds of previews in Lightroom, but I generally have 1:1 previews created when I import a new set of photos. They're processed, full-size versions of the RAW photo. It takes a lot of time to generate them but it's done all at once. I don't mind that upfront cost as I can just go make a coffee, read and come back in 30 minutes.

However, I often hop over to the Develop module while culling to see what the photo could look like with some basic adjustments or a crop to see if the shot is worth keeping. Unfortunately, 1:1 previews are not utilized in the Develop module. Even if I had generated Smart Previews, which are used in the Develop module, they are only created at a maximum size of 2560px on the longest edge of each photo.
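To put that size cap in perspective, here is a rough sketch of how much of a 42MP frame survives in a Smart Preview. It assumes a 2560px long-edge limit (the figure Adobe documents for Smart Previews) and the a7R III's 7952x5304 full-resolution output; the function name `smart_preview_size` is made up for this illustration and is not part of any Lightroom API.

```python
def smart_preview_size(width, height, long_edge=2560):
    """Scale (width, height) so the longest edge is at most `long_edge` pixels.

    `long_edge` defaults to the assumed Smart Preview cap; images already
    smaller than the cap are returned unchanged.
    """
    scale = min(1.0, long_edge / max(width, height))
    return round(width * scale), round(height * scale)


# Assumed full-resolution dimensions of a Sony a7R III RAW (about 42 MP).
raw_w, raw_h = 7952, 5304
prev_w, prev_h = smart_preview_size(raw_w, raw_h)

raw_mp = raw_w * raw_h / 1e6
prev_mp = prev_w * prev_h / 1e6

print(f"RAW:           {raw_w}x{raw_h} = {raw_mp:.1f} MP")
print(f"Smart Preview: {prev_w}x{prev_h} = {prev_mp:.1f} MP")
print(f"Preview keeps {prev_mp / raw_mp:.0%} of the original pixels")
```

Under those assumptions the preview holds only about a tenth of the original pixels, which is why it can't stand in for the full RAW once you zoom to 100%.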

So where does this leave me? Spending the majority of my time in the Develop module where generated previews won't help on a large display with frequent 100% zooming. The only savior we have here is that the Develop module is the only part of Lightroom with GPU acceleration:

Lr can now use graphics processors (GPUs) to accelerate interactive image editing in Develop. A big reason that we started here is the recent development and increased availability of high-res displays, such as 4K and 5K monitors. To give you some numbers: a standard HD screen is 2 megapixels (MP), a MacBook Retina Pro 15" is 5 MP, a 4K display is 8 MP, and a 5K display is a whopping 15 MP. This means on a 4K display we need to render and display 4 times as many pixels as on a standard HD display. Using the GPU can provide a significant speedup (10x or more) on high-res displays. The bigger the screen, the bigger the win.
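The megapixel figures in Adobe's note are easy to sanity-check. The sketch below assumes the usual native resolutions for each display class (the quote itself only gives the rounded megapixel counts), so treat the exact pixel dimensions as my assumption.

```python
# Assumed native resolutions; Adobe's note only states the megapixel counts.
displays = {
    "Full HD": (1920, 1080),
    "MacBook Pro 15-inch Retina": (2880, 1800),
    "4K UHD": (3840, 2160),
    "5K": (5120, 2880),
}


def megapixels(width, height):
    """Return the pixel count of a display in millions of pixels."""
    return width * height / 1_000_000


full_hd_mp = megapixels(*displays["Full HD"])

for name, (w, h) in displays.items():
    mp = megapixels(w, h)
    # Show each display's size relative to a standard HD screen.
    print(f"{name:<28} {w}x{h}  {mp:5.1f} MP  {mp / full_hd_mp:.1f}x Full HD")
```

A 4K panel works out to four times the pixels of Full HD, and a 5K panel to roughly seven times, which matches the quote and explains why a fullscreen Develop view on a big display is so demanding.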

Even with that, it's still very early for Lightroom GPU hardware acceleration and it leaves much to be desired. GPU acceleration can make most Develop controls quicker but it seems that can come at the slight expense of two things: the time it takes to load full-resolution images as well as moving from image to image. Also, actions like panorama stitching, HDR photo merging, the adjustment brush and spot removal tools do not seem to get any boost here.

Update Feb 2020: Lightroom Classic now brings full GPU acceleration for Lens Correction and Transform adjustments.

Inside the Develop module

Okay, here's my typical Lightroom workflow

After I have mostly completed the culling process and selected the better shots to keep in my collection,5 I go over each photo with a series of adjustments as needed. I most commonly visit these settings:

- Camera Calibration → Profile: The profile determines how Lightroom processes the RAW and serves as a basis for all your adjustments. Depending on the camera you use and Lightroom's support for it, you will see different options here. I believe the goal from camera manufacturers is to have the profile mimic the camera's own creative style settings had you had any enabled and shot a JPG; those settings don't affect the actual RAW.

I rarely use the default Adobe Standard profile and have Lightroom configured to use Camera Standard as the new default profile. Depending on the photo I may use something like Camera Landscape for more contrast and color but often find it too saturated and have to manually compensate for that. There's a wealth of information about Camera Profiles out there, but here's a starter.

- Remove Chromatic Aberration & Enable Profile Corrections: I tend to have these on by default. The latter will load a lens profile if one exists to correct any distortion (like barrel or pincushion) with your lens. One school of thought is to rarely use profile corrections as they can reduce detail and also lead to some minor cropping at times. But I find this to be a bit nitpicky and won't readily be able to discern a significant loss of detail by enabling it.6

- Transform: On certain occasions, like having a shot of a building that was taken at a slight skew, it can come in handy to enable a perspective correction. With more complex subjects, such as trying to tame two similar but slightly off leading lines in a photo, Lightroom has the guided transform feature. However, I try to only use these if the effect does not do too much. It can look pretty unnatural in those cases.

- Basics: The essential controls that I fiddle with on every shot: Exposure, Temperature, Tint, Highlights, Shadows, Whites, Blacks and to a much lesser extent clarity and vibrance. At times I will jump directly to the Tone Curve but I often really only go there to tweak one or two RGB curves a bit, not everything at once.

- HSL: Sparingly, I'll find myself wanting to reduce the luminance, saturation and rarely hue of a particular color in a shot. Most commonly I'll use it to decrease the prominence of a particular color in a scene I find distracting. Like if I adjusted the color temperature of a photo to be a bit warmer and it's making some yellow/orange foliage in a shot look obviously too saturated. Or if I wanted to adjust the luminance of blue to make a body of water darker or brighter to maybe compensate for other adjustments that may have made it appear a bit off.

-

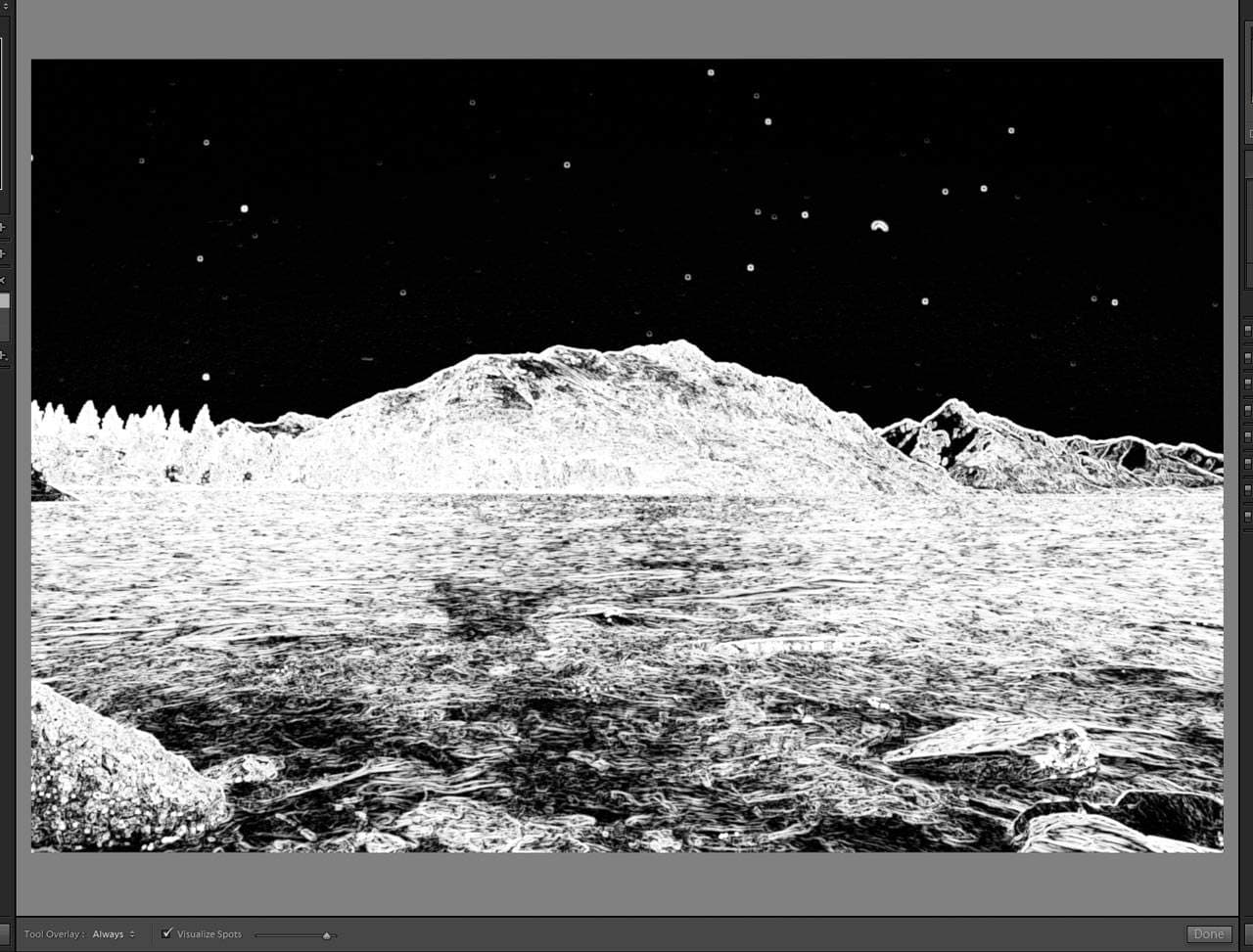

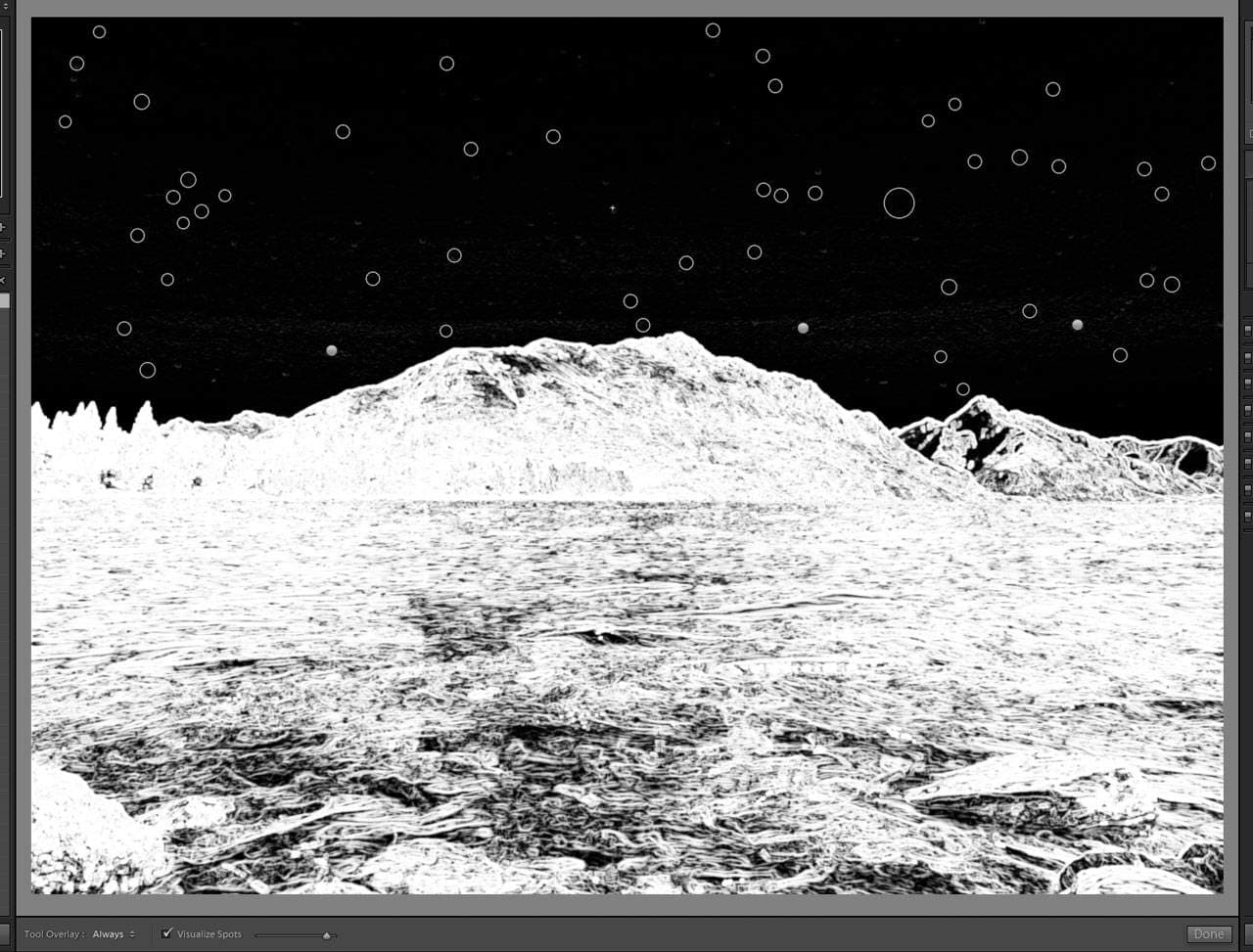

Spot removal, adjustment brush, graduated filter: Spot removal gets a good amount of use. Most frequently to clean up anything caused by a dirty lens. When you're out shooting all day you tend to get some dust specks, mist and other tiny debris that only becomes obvious when capturing long exposures. I use the "Visualize spots" mode of the spot removal tool to easily track down and remove these spots.

I use the adjustment brush much less, but in recent memory I used it to select a mountain range in the distance that had decreased visibility due to clouds/fog and increased the clarity and contrast a tad. But I'm using it less and less these days.

Graduated filter rarely gets used anymore, but in the past I liked placing it above the horizon to make the top of the sky a bit darker, reduce highlights, boost contrast and clarity to make clouds pop.

Lightroom Classic CC has a new range mask modifier for these actions that makes them easier to control that I've used a few times.

The visualize spots mode for spot removal. Left: Dirty lens from shooting near the water a lot one day with lots of spots obvious in the sky (the largest spot was actually a tiny scratch in my polarizer). Right: With corrections applied.

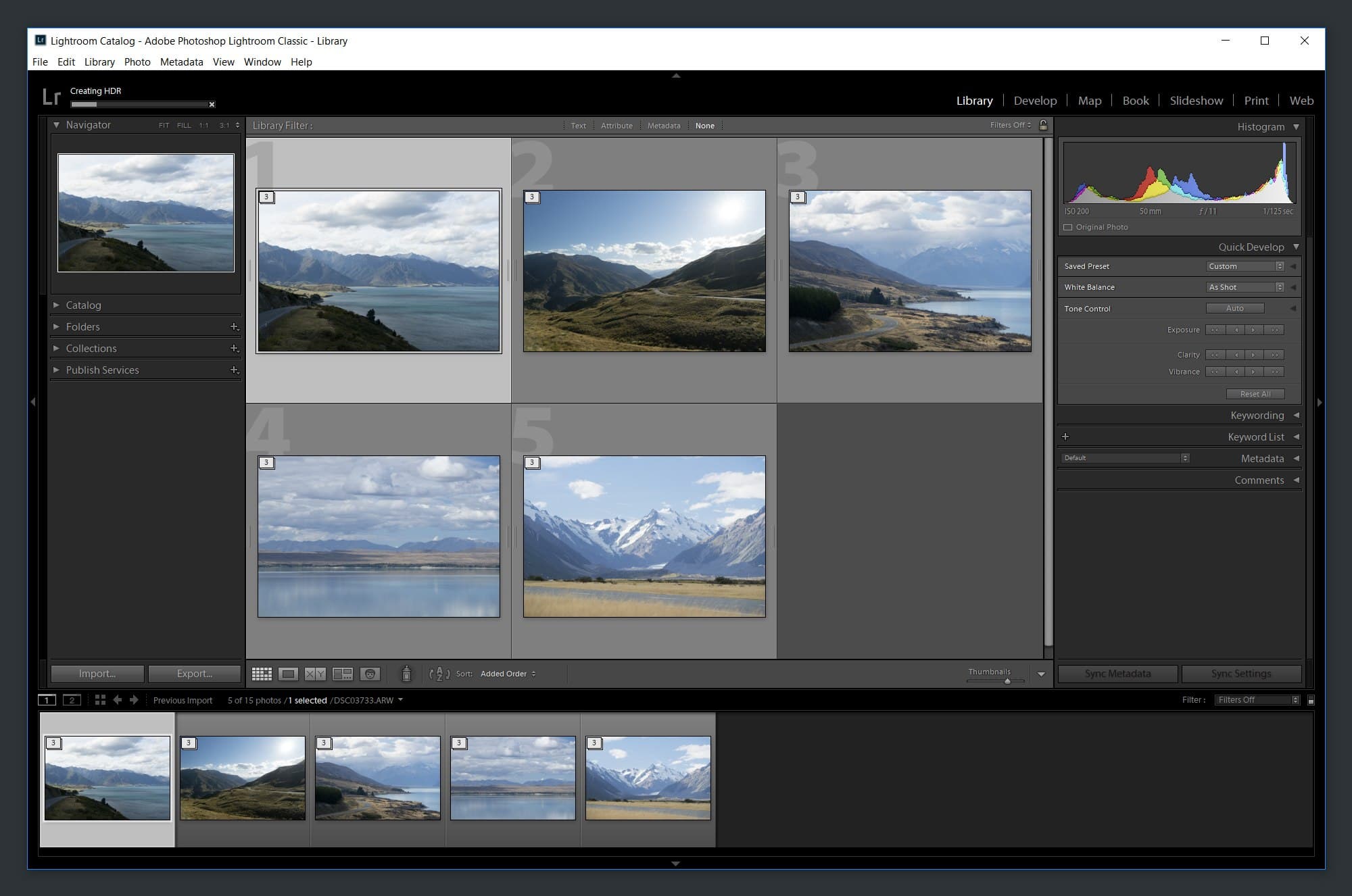

- Merge HDR: When necessary depending on the scene, I will turn on bracketing and shoot a ton of 3-shot brackets. I've done several 5-shot brackets but didn't find enough value in the difference to make up for all the extra storage and time required for those. In Lightroom I will stack each 3-shot bracket, then select a few of the stacks and process them in parallel with the headless HDR processing mode. Just press Ctrl (Windows) or Cmd (Mac) + Shift + H when you have a few stacks selected. This only works well if your HDRs are from the same scene and fairly similar, as the headless mode skips the HDR Merge Preview dialog and just goes with whatever settings you last used.

Even though I consider this light editing, that's still a ton of actions to do on one photo. Even something seemingly as simple as a profile correction can end up increasing the number of calculations Lightroom has to do on all subsequent actions. Adobe even recommends a particular order of operations in the Develop module to speed things up:

The best order of Develop operations to increase performance is as follows:

- Spot healing.

- Geometry corrections, such as Lens Correction profiles and Manual corrections, including keystone corrections using the Vertical slider.

- Global non-detail corrections, such as Exposure and White Balance. These corrections can also be done first if desired.

- Local corrections, such as Gradient Filter and Adjustment Brush strokes.

- Detail corrections, such as Noise Reduction and Sharpening.

Note: Performing spot healing first improves the accuracy of the spot healing, and ensures the boundaries of the healed areas match the spot location.
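If you like to plan a batch of edits before touching any sliders, Adobe's recommended order above can be written down as a simple sort. This is only a planning sketch in Python, not anything Lightroom exposes: the stage names and the `sort_edits` helper are labels I made up to mirror the five steps in the list.

```python
# Hypothetical stage labels mirroring Adobe's recommended Develop order.
RECOMMENDED_ORDER = [
    "spot_healing",  # 1. Spot healing
    "geometry",      # 2. Lens Correction profiles, manual/keystone corrections
    "global",        # 3. Exposure, White Balance and other global tweaks
    "local",         # 4. Gradient Filter, Adjustment Brush
    "detail",        # 5. Noise Reduction, Sharpening
]


def sort_edits(edits):
    """Return (stage, name) pairs sorted by recommended stage.

    Python's sort is stable, so edits within the same stage keep the
    order in which they were listed.
    """
    rank = {stage: i for i, stage in enumerate(RECOMMENDED_ORDER)}
    return sorted(edits, key=lambda edit: rank[edit[0]])


planned = [
    ("detail", "Noise Reduction"),
    ("global", "Exposure"),
    ("local", "Adjustment Brush"),
    ("spot_healing", "Remove dust spots"),
    ("geometry", "Lens Correction profile"),
    ("global", "White Balance"),
]

for stage, name in sort_edits(planned):
    print(f"{stage:>12}: {name}")
```

Adobe's note says global corrections can also be done first if desired, so the position of the `global` stage is the one part of this ordering you can safely move.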

Once I've adjusted each shot to my heart's content, going back and forth over each shot multiple times, I happily initiate a full-size JPG export. This takes a long time, but I care less about export speed than about speed in the Develop module; I use the opportunity to take a break and do something else while the computer works.

A word about presets

Why don't you just have a few presets to pick from instead of adjusting everything manually?

Having a robust set of custom presets tailored to your personal photography aesthetic can save a ton of time when faced with a new set of imported photos. But that's not really my thing. I simply don't like using presets as one-click-and-done filters. I always want to manually adjust things to see what a certain photo is capable of and not "leave anything on the table" by just using a preset I have lying around. It could get the job done but wouldn't be what I would have ended up with had I started from scratch.

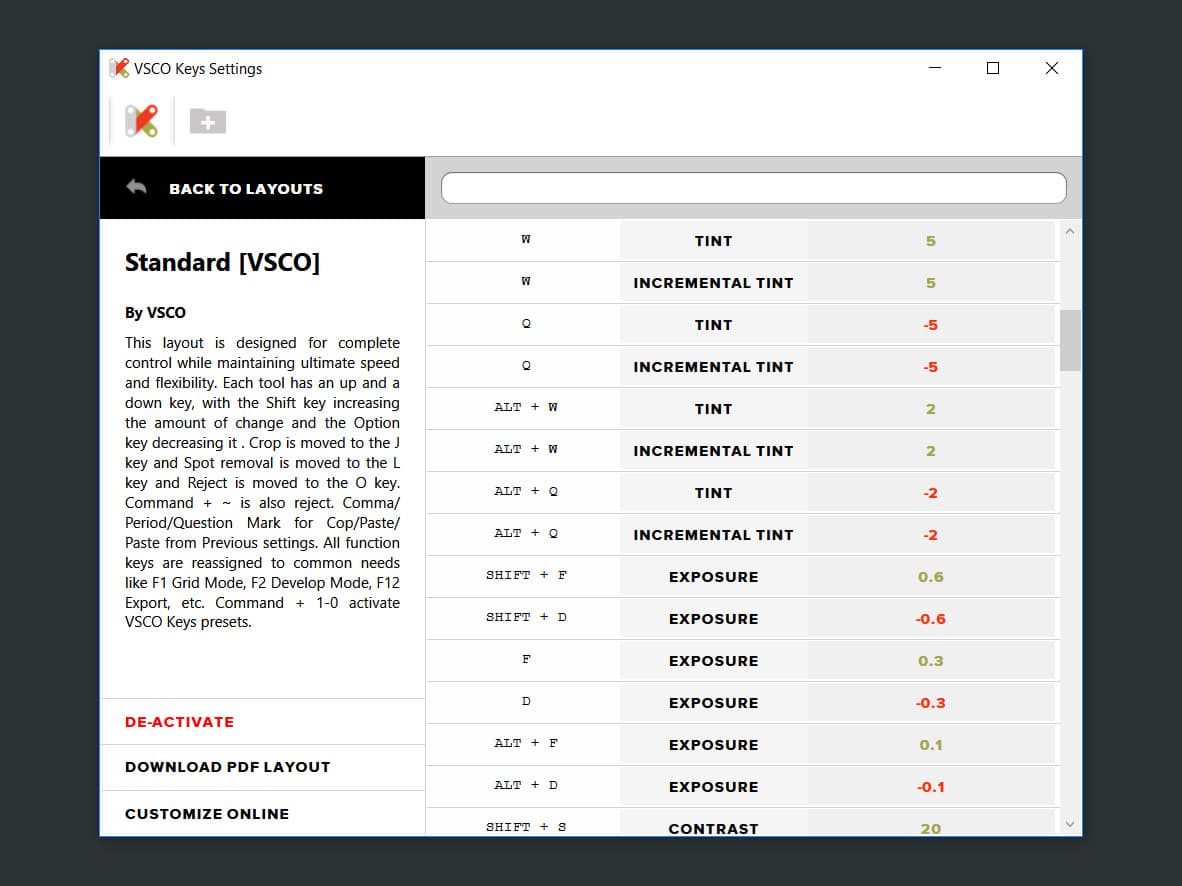

The thing that speeds up my workflow more than anything is not presets, but actually just a quick way to copy and paste develop settings with shortcut keys. I use VSCO Keys to do this using . and , hotkeys. It's quick and effective — I fiddle with settings on one photo to my liking, copy and use that as a base for any subsequent photos that are similar.

Many folks use presets in a similar way: to speed up basic, repetitive adjustments, then stack them to provide a quick base to work from. You might have a handful of presets to do things like boost contrast, increase shadows and so on. By making each one for a distinct action and not setting any other values, you can stack them by continuing to click on other such presets. Some folks love this flow but I never really got into it.7
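The reason stacking works is easiest to see if you think of a preset as a partial set of slider values. The sketch below models that idea with plain Python dictionaries; the slider names and numbers are invented for illustration and this is not how Lightroom actually stores presets.

```python
def apply_presets(settings, *presets):
    """Apply presets in order; each one overwrites only the sliders it sets."""
    result = dict(settings)  # copy so the starting settings stay untouched
    for preset in presets:
        result.update(preset)
    return result


# Illustrative slider values only.
base = {"Exposure": 0.0, "Contrast": 0, "Shadows": 0, "Vibrance": 0}

# Single-purpose presets: each sets one slider and nothing else.
boost_contrast = {"Contrast": 20}
lift_shadows = {"Shadows": 30}

# A "full look" preset that records every slider, including ones it leaves at zero.
full_look = {"Exposure": 0.0, "Contrast": 10, "Shadows": 0, "Vibrance": 15}

stacked = apply_presets(base, boost_contrast, lift_shadows)
clobbered = apply_presets(base, boost_contrast, lift_shadows, full_look)

print("stacked:  ", stacked)
print("clobbered:", clobbered)
```

The two single-purpose presets combine cleanly, while the full-look preset resets Contrast and Shadows because it stores values for them. That is the trade-off behind making stackable presets touch as few sliders as possible.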

Hardware considerations

What do you need most? Disk I/O, GHz, CPU cores, GPU?

As you know there are a few main levers that affect the majority of a computer’s performance: storage, RAM, GPU and CPU. To be more precise: storage throughput, RAM size, RAM speed as well as the number of CPU cores and clock speed. In the case of Lightroom, CPU plays the most important role in overall application performance and to a much lesser extent GPU.

Storage

Surprisingly, storage speed is not of the utmost importance to Lightroom as long as you have something decent. It's especially a non-issue if you have some kind of SSD and store everything on it. That can be rather expensive, so you may opt for a smaller SSD that only stores the Camera Raw cache, previews and catalog, plus a regular hard drive to store the images themselves. Even with that setup the Lightroom performance difference is fairly indistinguishable. A superfast NVMe SSD offers minimal benefit over a regular SSD as far as Lightroom is concerned.

Whatever your storage solution, you'll want a lot of storage space when dealing with hefty RAWs. Or consider investing in a NAS setup to archive shots when you're done with them.

RAM

As for RAM, you probably don't need more than 16GB for Lightroom. However, if you aggressively multitask and/or use Adobe Premiere Pro you'll want at least 32GB. You can definitely exceed that amount and go with the maximum amount supported by your motherboard.

But there are some things to keep in mind. First, RAM is not as cheap these days as it used to be for a variety of reasons, and low latency RAM is even pricier. Second, if you care about bleeding edge performance and overclocking, you should find your ideal amount of RAM in 2 sticks, not 4. It's harder to maintain an aggressive overclock with 4 sticks of RAM putting a larger strain on the integrated memory controller, especially if the CPU only has dual-channel support like the Intel i7-8700K and if the CPU itself is running an aggressive overclock.

GPU

Lightroom can use a good graphics card for hardware acceleration but it really doesn't take the most advantage of it. You might notice the benefit if you are using a 4K or better display and do basic actions in the Develop module.

On lower resolution displays I've heard that having GPU acceleration enabled can actually hurt performance as your computer spends time sending data between the CPU and GPU that the CPU could have just done on its own in a shorter amount of time.

Hopefully Lightroom will make better use of high-end graphics cards with future software updates. You definitely don't need a top of the line card for Lightroom but if you're going to get one anyways you'll want to lean towards an Nvidia card. Adobe software seems to be more optimized for Nvidia graphics cards.

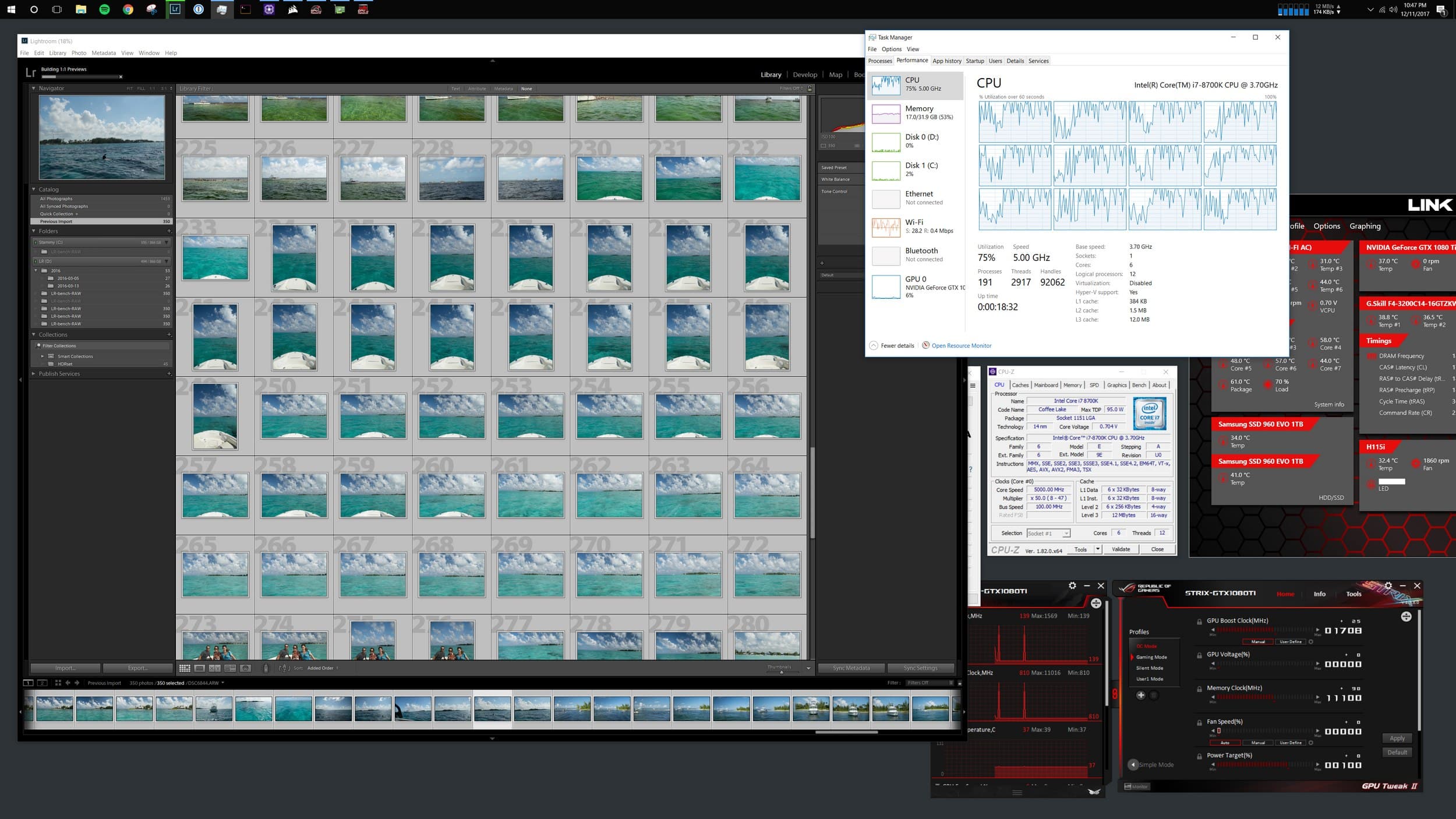

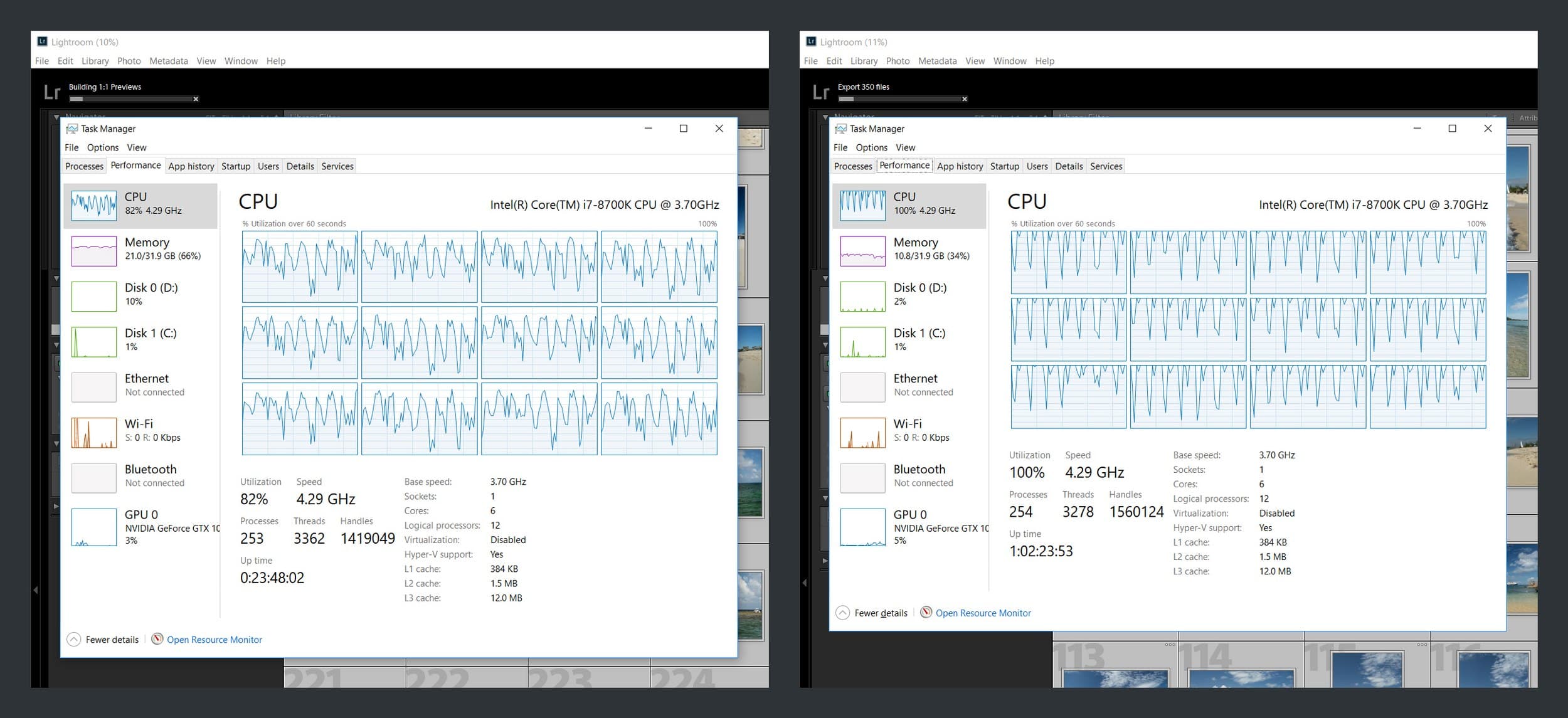

CPU

Adobe's recent upgrade to Lightroom Classic CC brought some performance improvements, largely related to increased multi-core performance for generating previews. Overall though, Lightroom does not make the best use of many CPU cores. The Develop module can to a degree but the performance makes it obvious that it's not terribly efficient. This is a theme with all of Lightroom in regards to performance: it could always be better.

For my needs Lightroom loves the highest clock speed it can get, as opposed to a ton of lower clocked cores.

Having more cores in Lightroom can help you if you care more about exporting images and generating previews. That is not something I care about as it happens so infrequently compared to me fiddling with sliders in Develop. Otherwise, you're better off with fewer cores with a very high clock speed. This will help with:

- Scrolling through photos in the Develop module

- Performance and responsiveness for adjustments in Develop

- Converting images to DNG

- Merging HDR images and stitching panoramas

If you shoot a large amount of photos and hate waiting for images to export or previews to generate, then a higher core count CPU like the Core i7 7820X 8 Core, Intel Core i9 7900X 10 Core, or even the Core i9 7940X 14 Core may be a great choice depending on your budget. You certainly give up general editing performance as you get into the higher core counts, but a 30-40% reduction in the time it takes to export and generate previews can be a massive time saver. However, if this isn't a major consideration and you just want the smoothest editing experience possible, then the Intel Core i7 8700K is still our go-to recommendation for Lightroom.

What about video editing?

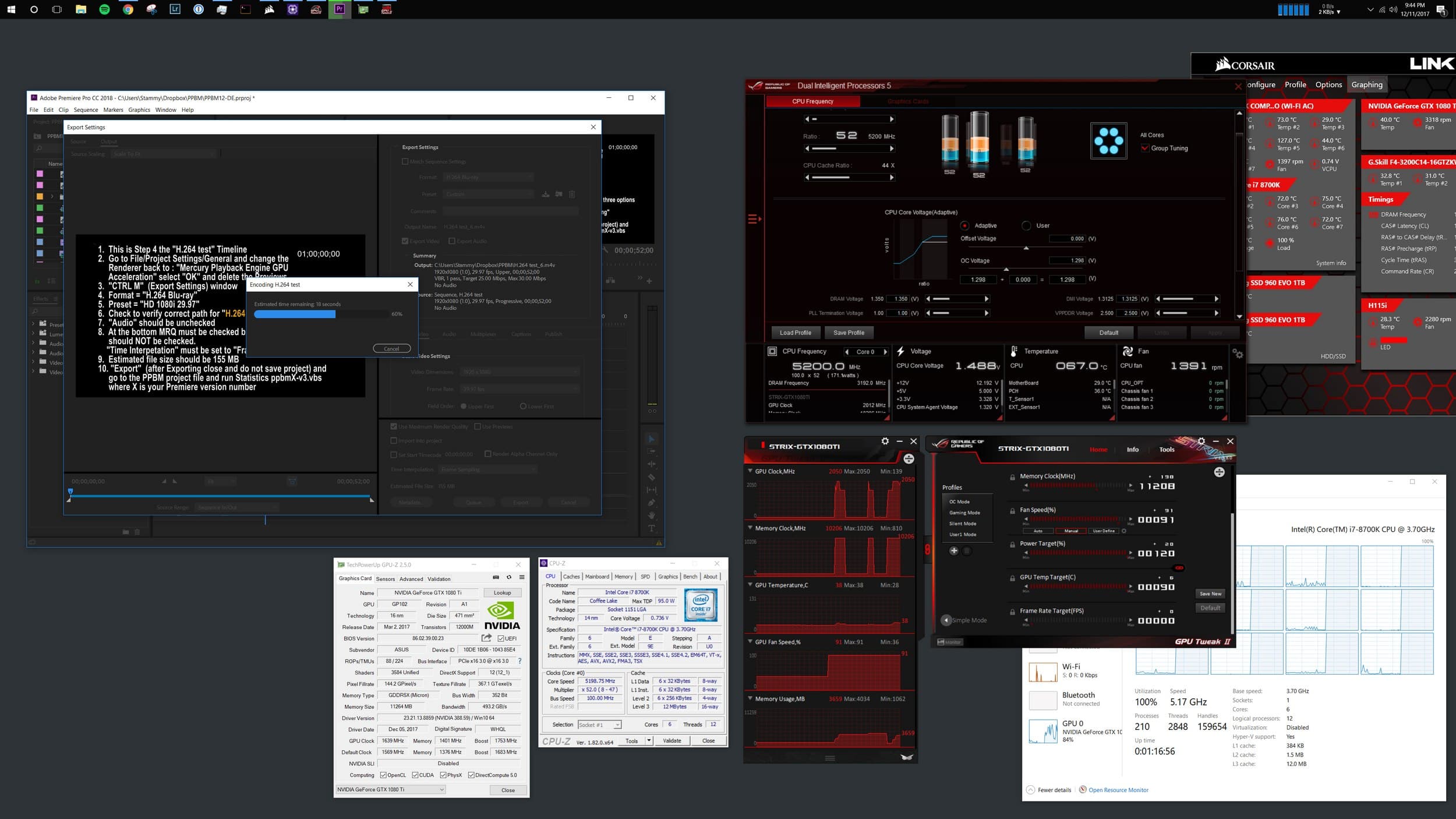

Premiere Pro is great at using multiple cores and a beefy GPU

I wouldn't consider myself anywhere near savvy in any video editing apps, but I have been shooting more and more video footage on my trips. I've gone from making edits in iMovie to Final Cut Pro X then After Effects and finally to using Premiere Pro. A good chunk of my footage is now captured in 4K. Better Premiere Pro performance is a nice to have for me but not a priority compared to Lightroom performance.

On the completely opposite end of the spectrum compared to Lightroom, Adobe Premiere Pro has much, much better multicore efficiency. It also seems to put a powerful graphics card to good use and will most definitely make use of as much RAM as you can give it.

My common tasks in Premiere Pro — rendering previews, warp stabilization, Lumetri color adjustments and exporting — all take advantage of additional processor cores. And the faster the clock of each core, the better.

Why build a PC?

Building a PC is easier than ever today

I'm no stranger to building a computer from scratch; I have built dozens by now8. However, quite a few things have changed from the last time I built a computer.

These things are now fairly commonplace and welcomed additions to the building process:

- AIO (all-in-one) liquid cooling systems: Back in the day if you wanted a high-performance and relatively quiet cooling option for your overclocked processor, you would have to source a radiator, fans, pump, reservoir, tubing, CPU/GPU/Northbridge waterblock and assemble it yourself. You'd have to fill it up, put some anti-algae chemicals in, get all the bubbles out, do hours of leak testing and then change the liquid coolant out every 6 months or so. It was a huge hassle.

Now you can just buy an all-in-one system that comes with everything you need in a simple closed loop. Most AIO units these days even have a USB connection and some desktop software to help monitor and automatically ramp up the fans and pump depending on CPU or GPU activity.

- Fully-modular PSUs: Why should a computer with a million internal drives and accessories have the same number of power supply cables as a basic setup with just one SSD? There's no need to have 20 extra power cables taking up space in your case if you don't need them.

Modular power supplies let you connect only the cables you need, reducing clutter from your case. And most offer attractive sleeving styles to boot. Just don't ever mix and match cables from other PSUs — there is no standard pin layout for the connections on the PSU and rookie PC builders often burn their PC by accidentally keeping old cables in there when switching to another modular PSU.

- Operating systems are sold on USB sticks now. No longer do you need to buy a cheap optical drive just to install the operating system and never use it again. Windows 10 is now sold on a tiny USB stick.

- Cases with no 3.5" and 5.25" drive bays! And on that note, why should you have a case with unsightly internal and external drive bays you may never use? Case manufacturers have started offering cases entirely devoid of 5.25" optical drive bays as well as 3.5" racks, or they have removable racks.

- M.2 NVMe SSDs: Probably the biggest innovation for me personally. SSDs in a small PCIe stick that offer a tremendous performance advantage over even regular 2.5" SSDs. Something like 3-5x faster.

- Case windows use real glass now. Modern high-end computer cases actually use real glass instead of scratch-prone and flimsy lucite or plexiglass. I still think windows in computer cases are kind of silly though.

- Wireless mice are actually good now. Okay, I'm really dating myself here but for the longest time wireless mice were laggy. Noticeably laggy cursor. Impossible to use for even the most basic gaming and they came with horrible battery life. I have been using wired Logitech mice for about a decade... but I recently switched to a Logitech MX Master 2S. Wireless mice with great battery life and nice customizability are finally here.

In the past you sort of had to wing it when it came to picking parts for your build. You would have to read a bunch of reviews for motherboards, graphics cards, RAM and so on. Then you might need to actively participate in a computer forum to see what folks were running, if there were any compatibility concerns, order everything and then hope everything worked as expected.

PCPartPicker.com is one relatively new resource that I have found to be invaluable. People share their build lists, photos and more there. It helped me answer very specific questions on numerous occasions:

- Does this graphics card fit in this case?

- Will this watercooler fit?

- What does this case look like with these parts?

- What is the smallest case that will fit this motherboard?

Chances are someone out there has built a machine identical to what you want to build and you can just look up pictures of that rig.

In addition, I've found a few other handy resources while building: the active Reddit r/buildapc and some popular YouTube channels like Bitwit, Linus Tech Tips and JayzTwoCents.

Though it would be remiss of me not to mention why it may not be a great time to build such a PC: RAM prices and graphics card prices have skyrocketed in the last year, the latter mainly due to insane high-end graphics card demand from cryptocurrency mining.

The case

Finding a good case will never be easy

Unfortunately, one thing has not become easier over time. Finding an attractive, understated and simple case. Case manufacturers seem to only cater to the gamer stereotype of excess and gaudy.

I'm in my 30s, I'm a designer... I want something simple but that doesn't mean I don't want to have the best hardware, support for large water-cooling radiators and expected case amenities like thumb-screws, anti-vibration features and other noise considerations. I couldn't care less about LED fans, weird intake designs and other questionable aesthetic choices.

In the past I tended to like small form factor computers, having made quite a few Shuttle SFF computers. So I started there, thinking I could get a micro-ATX motherboard. This would prove to be a challenge given my desire to have a long full-size high-end graphics card.

There were a few that were somewhat close to what I was looking for like the Fractal Design Define Mini C and Corsair Air 240, at least size-wise. Then I found a Kickstarter for a ridiculously small case called the DAN Cases A4-SFX that used a mini-ITX motherboard and could house a full-size graphics card. It was dubbed the "world's smallest gaming tower" at roughly the size of a shoebox. Sure, it had some tradeoffs (non-ATX PSU, limited motherboard selection, limited heatsink-fan options and not the best cooling in general) but it seemed perfect.

Unfortunately, it was sold out everywhere. A second version eventually launched on Kickstarter and I ordered it, though it won't arrive for a while. Maybe I'll use that for a separate build later on.

The more I thought about it, I wanted a case large enough for me to pick a motherboard with great overclocking capabilities, the ability to have a water-cooling setup and the room to expand to two graphics cards via SLI if I so decided in the future9.

The searching continued for a case that could accommodate an ATX motherboard as well as a large 280mm radiator. I'll spare you the details, but after looking at a bunch of cases (ones from NZXT, Fractal Design, Corsair and Lian Li mainly), I landed on the NZXT S340 Elite VR. NZXT also has a newer model called the H700i that seems interesting and has a bit better internal cable management but I'm not a fan of some of the perforated panels it has on the top.

Shot these photos myself! Got a studio lighting setup just for this article.

The parts

What parts I chose and why

I first built this computer in April 2017 with a quad-core i7 7700K and Z270 chipset motherboard. But later that year Intel released the six-core i7 8700K processor and Z370 chipset. I ended up upgrading both the CPU and motherboard at that time.

Processor

Intel Core i7 8700K

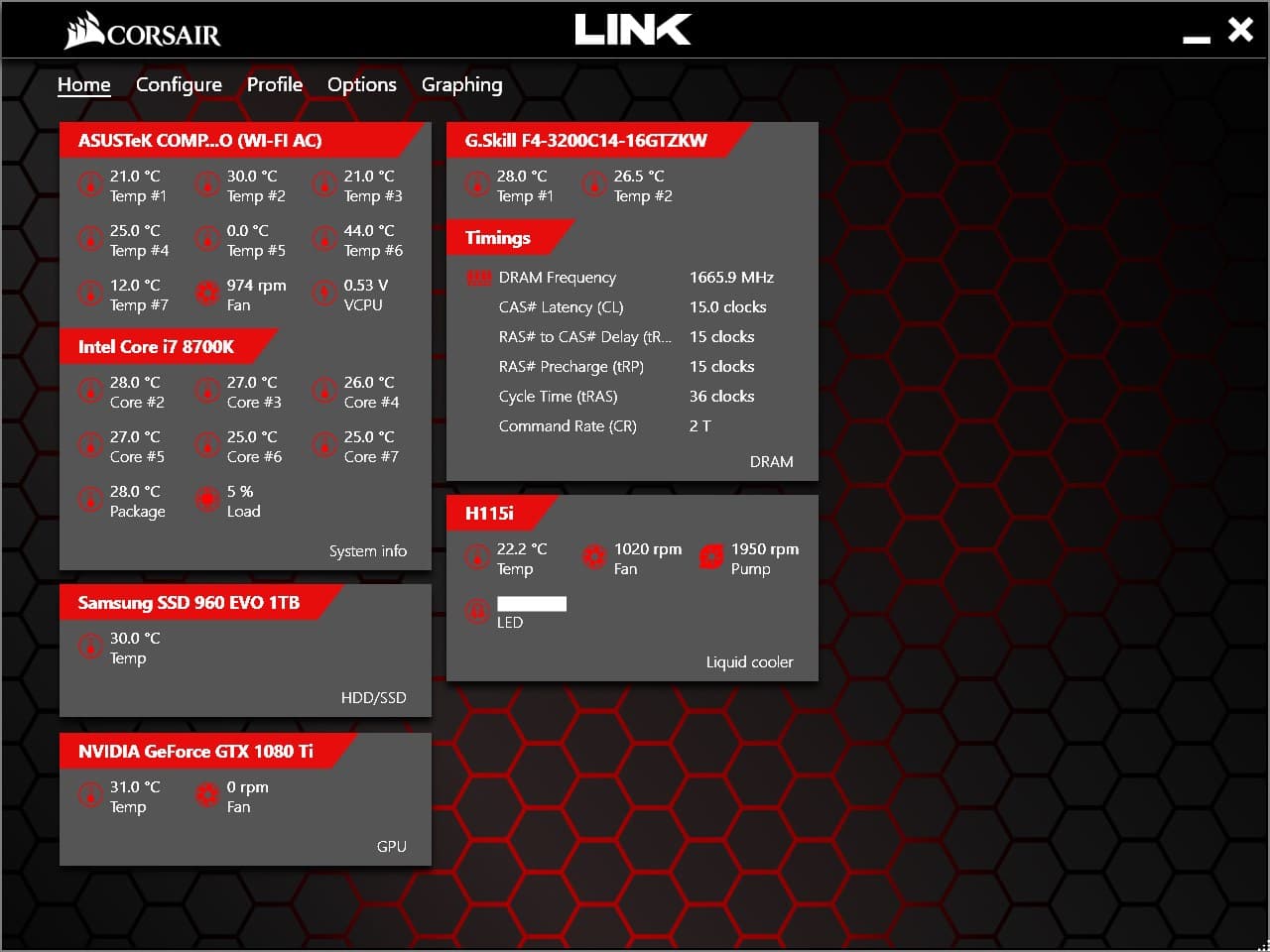

I went with the hexa-core Coffee Lake Intel Core i7 8700K processor running at 3.7GHz (4.7GHz with Turbo Boost). At the time I built this computer, the 8700K was the best processor for gaming as well as performing well in Lightroom compared to other chips. It's not the best for Adobe Premiere Pro but it's better than a 7700K with fewer cores.

Why not go for more than 6 cores?

There are processors with more cores, from both Intel and AMD, but I don't think they would be better for what's important to me: Lightroom and gaming, two uses that traditionally prefer higher clocks and don't make good use of many cores. Typically, the more cores a processor has, the lower the clock speed per core.

For example, each core in the ridiculous $2,000 18-core Intel Core i9 7980XE has a mere base clock speed of 2.6GHz but with a Turbo Boost (v3.0) up to 4.4GHz. There's some extra clarification to be made here: Turbo Boost does not mean every core gets that speed. In this example, only two cores get 4.4GHz. If there was some magical processor that had a ton of cores where each core had a very high clock speed as well, then the case may be different.

This does also apply to the 8700K. While the Turbo Boost is listed at 4.7GHz that's only for one core. If the computer decides two cores should be boosted, then they are each at 4.6GHz. That continues down to all cores running at 4.3GHz with Turbo Boost. Compare to the 7700K that has a Turbo Boost of 4.5GHz for one core and 4.4GHz for all four cores. So yes, the comparable all core Turbo Boost speed of the 8700K is slightly slower than the 7700K chip.
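To put rough numbers on that trade-off, here is a minimal sketch that uses only the Turbo Boost figures quoted above. Multiplying core count by the all-core clock is a crude proxy for multi-threaded throughput (it ignores architecture, memory and thermals), and the dictionary and function names are made up for illustration.

```python
# Turbo Boost clocks (GHz) quoted in this post; in-between core counts are omitted.
CHIPS = {
    "i7-7700K": {"cores": 4, "single_core_ghz": 4.5, "all_core_ghz": 4.4},
    "i7-8700K": {"cores": 6, "single_core_ghz": 4.7, "all_core_ghz": 4.3},
}


def aggregate_ghz(chip):
    """Crude multi-threaded proxy: core count times the all-core turbo clock."""
    return round(chip["cores"] * chip["all_core_ghz"], 1)


for name, chip in CHIPS.items():
    print(
        f"{name}: {chip['single_core_ghz']}GHz single-core, "
        f"{chip['all_core_ghz']}GHz all-core, "
        f"{aggregate_ghz(chip)} aggregate GHz"
    )
```

Per core, the 8700K is a touch slower than the 7700K when every core is loaded at stock settings, even though its total across six cores is higher.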

Then why did I get the 8700K if that's the case? Because I'm going to overclock the heck out of all cores on the 8700K. A bit of a spoiler, but I got a good chip and was able to overclock higher than with the 7700K.10 And as a nice secondary benefit the extra two cores mean I have better performance for applications that make good use of multiple cores and many threads, like Premiere Pro.

I would try to explain more of the current landscape of Intel processors... but it would take way too long to even begin to explain and it doesn't really matter.11

CPU cooling

Corsair Hydro H115i AIO liquid cooler

As I mentioned above, all-in-one liquid cooling options are affordable and highly performant alternatives to creating your own water-cooling loop, not to mention the related hassle and maintenance. These systems are easy to use, as long as your case is large enough to support the radiator size you want.

I opted for one of the larger ones: the Corsair H115i has dual 140mm fans for its 280mm radiator. Corsair recently released a newer version called the H115i PRO RGB but the main differences seem to be a pump head with RGB LED lights and mag lev fans that aim to be quieter (which I will probably order separately and swap out my current fans).

While I got the largest and easiest to use Corsair 280mm AIO system, there are a few other options if you're feeling more adventurous and wish to build a custom loop or have a larger case and want something more performant. If you plan to watercool your graphics card and don't want to have to deal with placing a second radiator from an AIO kit, a custom loop is a good way to go.

- EKWB S280 kit: Higher-end custom kit

- NZXT Kraken X62 AIO 280mm: Great Corsair H115i alternative

- Corsair H150i PRO RGB: Larger 360mm kit

Motherboard

ASUS ROG Maximus X Hero

Aside from the basic need that it support LGA1151 processors and use the Intel Z370 chipset, I had a few requirements when I began searching for the motherboard:

- Onboard 802.11ac Wi-Fi: Because I really don't want to have to get an additional card to add Wi-Fi capability.

- Two M.2 slots: I didn't want to go the route of a traditional SATA SSD with this build and wanted to go with a tiny and speedy M.2 card slot SSD. As for why I wanted two, more on that in the section below.

- First-class overclocking support: There are a few things I like to see in a board I plan to overclock with, even a bit:

- Large built-in heatsinks over vital parts of the chipset, especially the power management components above and to the left of the CPU socket.

- An easy way to reset or diagnose why the computer doesn't boot (external rear restart buttons are nice, as well as an onboard display to indicate error codes).

- A solid UEFI BIOS that lets me control everything related to overclocking. While modern motherboards also have companion Windows software to let you control this on the fly, it's nice to have more control in the UEFI BIOS itself.

- Aesthetics: Definitely further down on the list of wants, but I'd like something fairly discreet without a ton of bright red RAM and PCIe slots. Nowadays everything (motherboards, graphics cards...) has a ton of LEDs on it but fortunately they are all customizable so I can turn them off.

- Full ATX form factor: Adequately spaced PCIe slots to accommodate large graphics cards with non-standard height coolers and future expansion cards I may plug in.

- Strong, reinforced PCIe slot: Graphics cards are so heavy these days with massive coolers that I'm always worried I'm going to damage the PCIe slot with all the weight. While I plan on getting a graphics card brace to help with this, it would definitely be nice if the motherboard had a stronger PCIe slot, or "SafeSlot" as Asus calls theirs.

This may sound like a laundry list but if we're talking about fairly high-end boards, there are a lot that meet these needs. I ended up going with one of Asus' many Z370 options. In fact, the one I went with was the lowest end model of this high-end ASUS ROG Maximus line that caters to the gaming and overclocking crowd.12

If you're looking for something with similar functionality but a few less boxes checked, any Z370 motherboard from the enthusiast Asus ROG Strix line below this Maximus line would be a solid choice.

Graphics

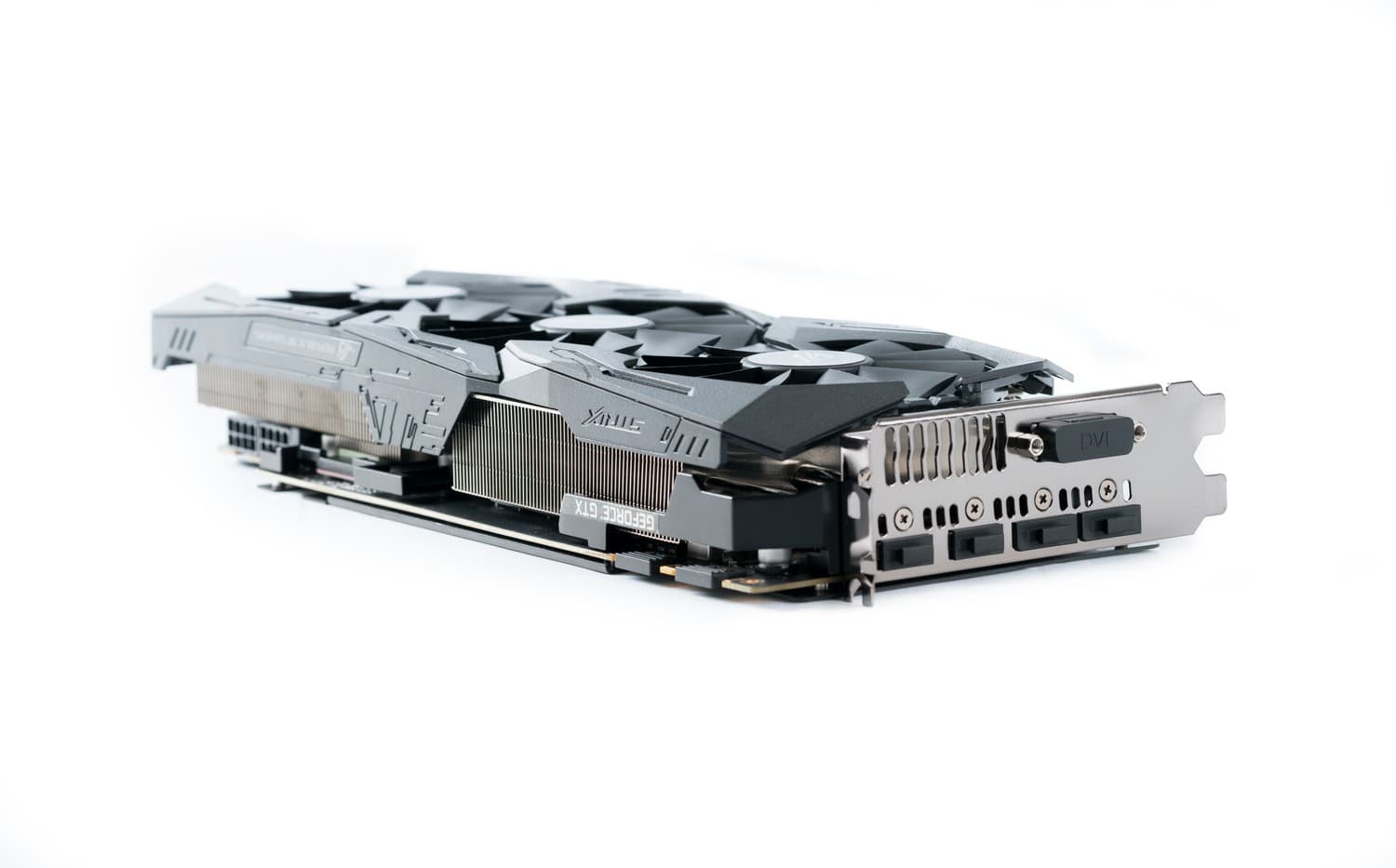

ASUS ROG STRIX Nvidia GeForce GTX 1080 Ti

For the graphics card, there was really no beating the just-released (at the time) Nvidia GTX 1080 Ti. The much more expensive $1,200 Titan Xp came out shortly after but had marginally better performance (around 5-7%) — gains that could mostly be achieved by mildly overclocking the GTX 1080 Ti. And as I had mentioned earlier, Lightroom and other Adobe applications I use frequently are more optimized for Nvidia cards at this time.13

You might be thinking.. holy crap, $750+ for a graphics card!?! That's almost double the price of the CPU.

First off, this card gets you solid VR and 4K-and-beyond gaming, and should continue doing its job well into the next generation of VR gear. With a clock speed nearing 1.6GHz, 11GB of GDDR5X VRAM, 3,584 CUDA cores, 11.3 teraflops and a whopping thermal dissipation of around 250W (more than double the i7 8700K CPU's TDP), the GTX 1080 Ti is a beast. If you care more about the details, this Anandtech review should be more than enough.14
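As a sanity check on those numbers, peak single-precision throughput is commonly estimated as CUDA cores × 2 floating-point operations per clock (one fused multiply-add) × clock speed. The sketch below assumes the reference boost clock of 1,582MHz, which this post only describes as "nearing 1.6GHz"; the function name is illustrative.

```python
def peak_fp32_tflops(cuda_cores, boost_clock_mhz, flops_per_core_per_clock=2):
    """Theoretical peak FP32 throughput in teraflops.

    Assumes each core retires one fused multiply-add (2 FLOPs) per clock,
    which is a best case that real workloads rarely sustain.
    """
    return cuda_cores * flops_per_core_per_clock * boost_clock_mhz * 1e6 / 1e12


# GTX 1080 Ti: 3,584 CUDA cores; the 1,582MHz boost clock is an assumption here.
print(f"{peak_fp32_tflops(3584, 1582):.1f} TFLOPS")
```

An overclocked card simply moves the clock term, which is why a mild overclock can close most of a single-digit percentage gap to a faster card.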

High-end graphics cards have matured significantly since the last time I purchased one for a build. With their ridiculous number of cores excelling at highly parallelizable tasks, modern graphics cards have also found a life beyond gaming with the rise of general-purpose computing tasks on GPUs (GPGPU) like cryptocurrency mining.15

However, knowing I wanted the GTX 1080 Ti wasn't enough. I had to pick out which of the many different models I wanted. I knew I wanted to avoid the standard "blower" type reference design cards. The single-fan blower style cards tend to be fairly loud and lack some ideal thermal characteristics that I'd want for such a card, especially one I will overclock. I ended up with this triple fan ASUS model that also featured a huge heatsink requiring a 2.5-slot height. There are way more options for GTX 1080 Ti cards at the time of publishing compared to when I started building this PC; for the most part look for anything with a huge cooler and you should be good.

There's one caveat though. The GeForce line of graphics cards don't output 10-bit color graphics (40-bit RGBA) to a 10-bit monitor unless you're in a DirectX 11 fullscreen mode, which is basically only for gaming. It seems that Nvidia blocks their consumer line of cards from outputting 10-bit color for professional applications and prefers that you buy a card from their much more expensive Quadro line (the top Quadro cards range in price from $2,000 to $7,500!).

Why get only one card?

I initially considered going for a dual-card SLI setup. After some research I discovered two things:

- Lightroom has no support for a dual card SLI setup.

- Not many PC games even have SLI support now.

As such, it doesn't seem worth pursuing a dual-card setup. I can already game at 4K 60fps no problem with this single GTX 1080 Ti. However, I expect the need for and adoption of SLI support from developers to change as the need for even more performance grows with future high-resolution, 240Hz DisplayPort 1.X+ monitors and VR HMDs.

- ASUS NVIDIA GTX 1060: Budget Performance

- EVGA NVIDIA GTX 1080 Ti Hydro Copper Waterblock: Tuner's choice

- NVIDIA TITAN Xp: Higher end

- NVIDIA TITAN V: Highest end

Storage

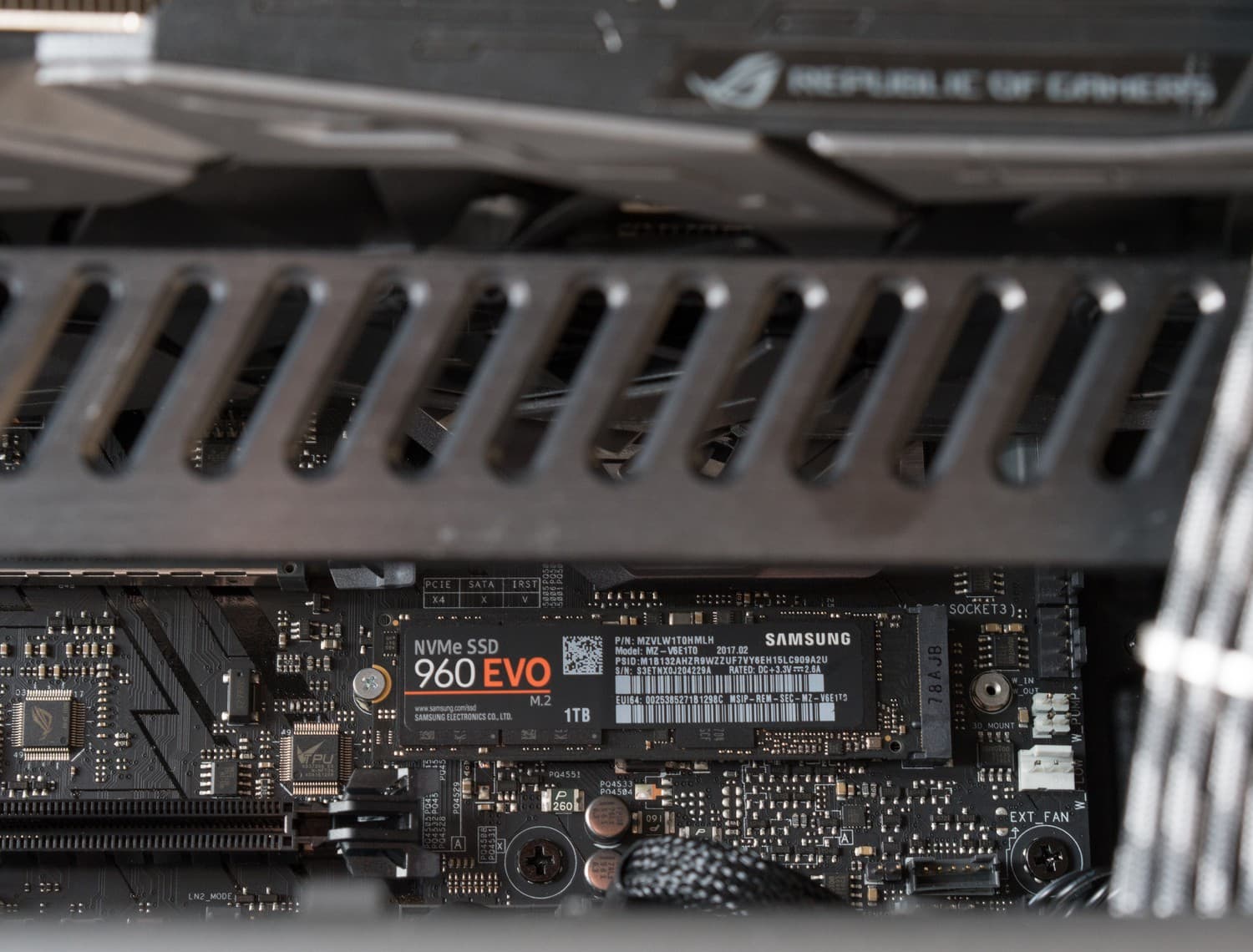

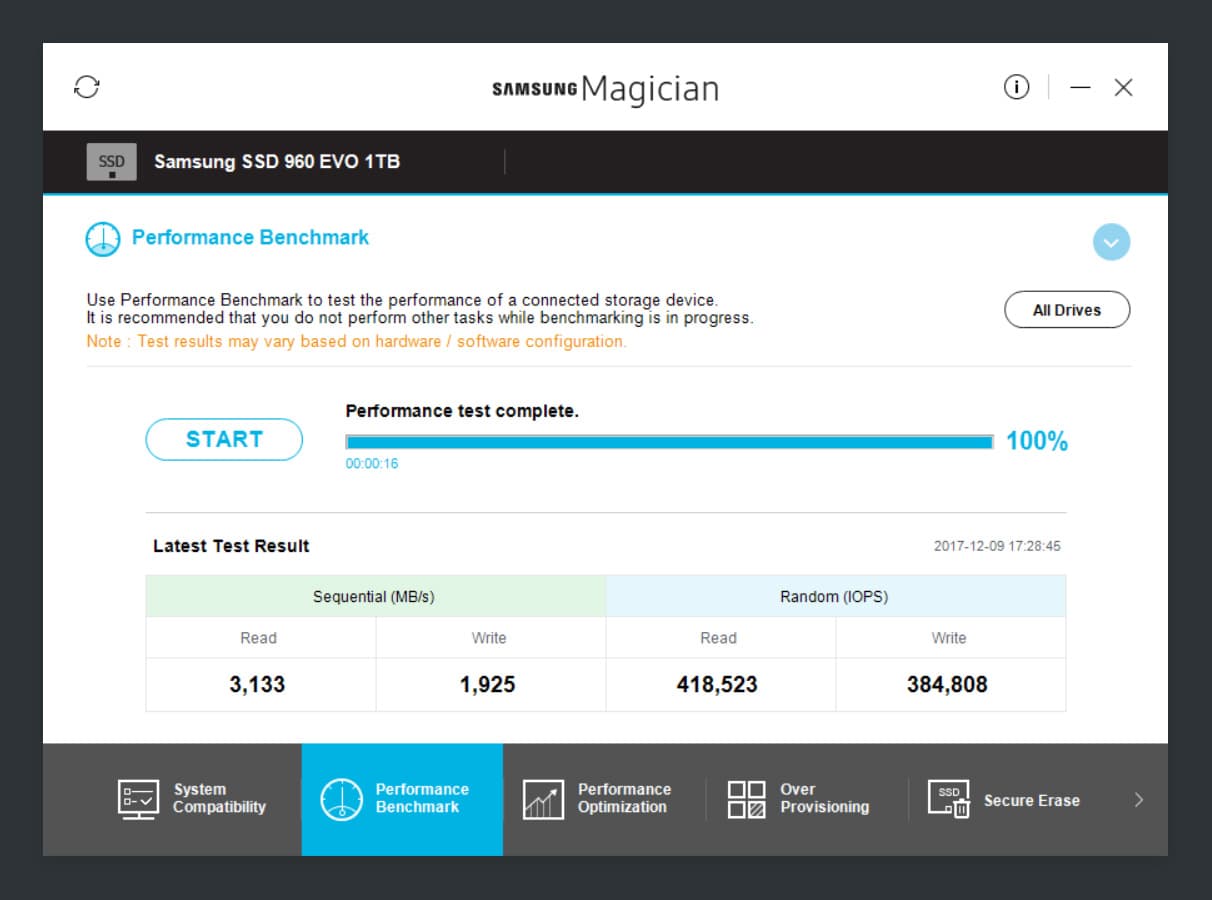

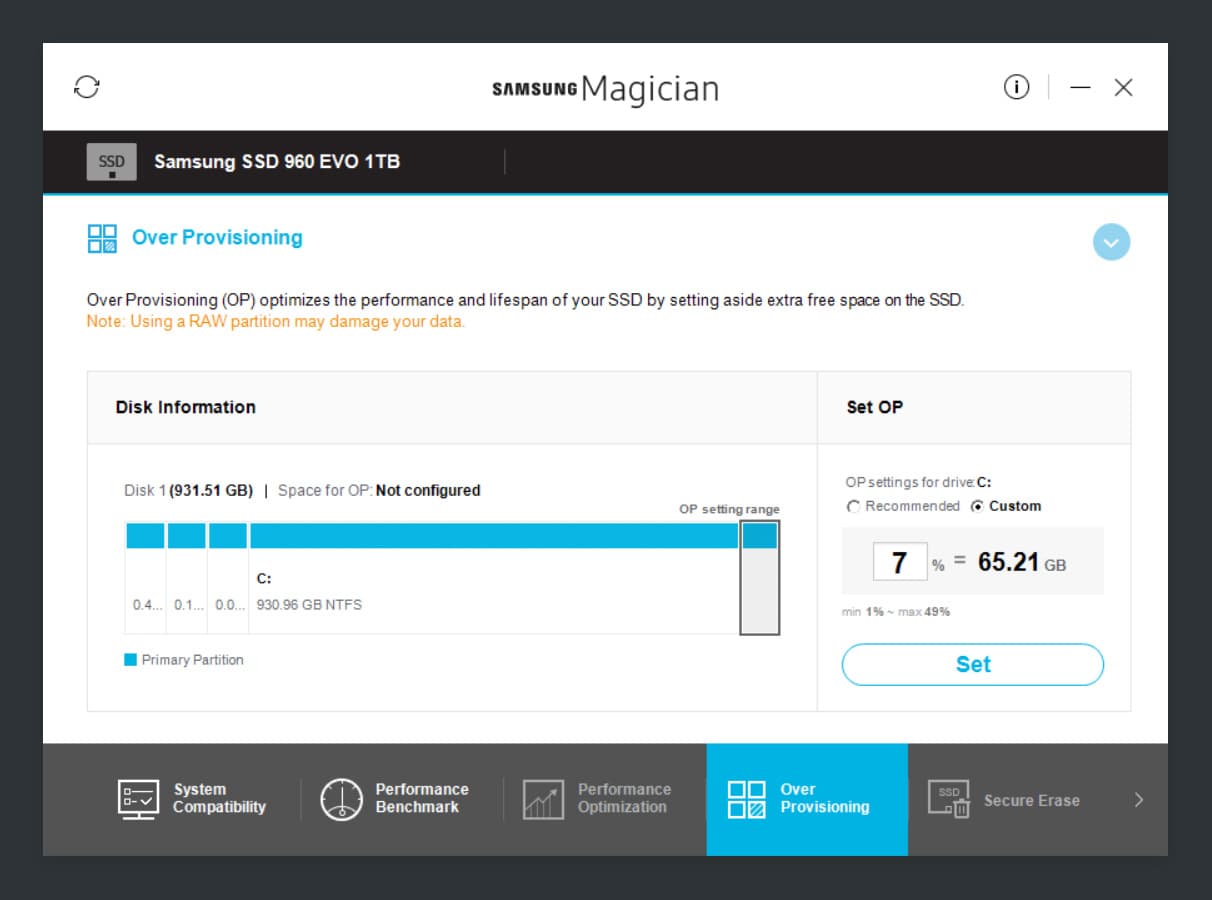

Dual 1TB Samsung 960 EVO M.2 SSDs

I had heard so much about the crazy performance of these tiny new PCIe NVMe M.2 SSDs that I had to try one. Some of the latest high-end M.2 SSDs boast speeds more than 3-5x faster compared to their SATA counterparts.

960 EVO at home in its M.2 card slot.

But first.. what the heck does PCIe NVMe M.2 mean?

- PCIe: The high-speed serial expansion bus that connects to a bunch of peripherals like graphics cards and some types of storage (not SATA). You might have heard about a CPU/chipset supporting a particular number of PCIe lanes. That roughly refers to how much bandwidth a particular device may require (each lane equates to 4 physical wires: two to send, two to receive). For example, a modern graphics card usually wants a PCIe x16 slot to get 16 lanes for more bandwidth while current M.2 SSDs only require 4 lanes.

- NVMe: Short for NVM Express (which is short for something even longer), NVMe is just a specification for interfacing with non-volatile storage attached via PCIe. It's like an API for these new SSDs. Previously, PCIe-attached SSDs had their own custom way to talk to the chipset and that led to requiring custom drivers. NVMe is now the standard and was designed with SSDs in mind, compared to the precursor protocol AHCI that was made with spinning disks in mind.

- M.2: And this is simply the name of the connector for the expansion card itself. You might see them called M.2 2242 or 2280. That refers to the size of the card: 22mm wide and either 42mm or 80mm long.
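Those size codes are easy to decode by hand, but a small sketch makes the rule explicit: the first two digits are the width in millimetres and the remaining digits are the length. The helper name is hypothetical, and the five-digit case covers longer cards such as 22110.

```python
def parse_m2_size(code):
    """Split an M.2 size code such as '2280' into (width_mm, length_mm)."""
    if not code.isdigit() or len(code) not in (4, 5):
        raise ValueError(f"unexpected M.2 size code: {code!r}")
    # First two digits are the width; everything after is the length.
    return int(code[:2]), int(code[2:])


for code in ("2242", "2280"):
    width, length = parse_m2_size(code)
    print(f"M.2 {code}: {width}mm wide, {length}mm long")
```

Checking the code against the standoff positions on your motherboard is the main practical use: a board that only has a 2280 mounting hole will not secure a 2242 card.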

When I began researching M.2 SSDs for this build, there seemed to be only two options when it came to no-holds-barred performance: the Samsung 960 EVO and the Samsung 960 PRO. The EVO model uses TLC V-NAND with some smart uses of two kinds of SLC caches to increase performance. The PRO model on the other hand uses superior MLC V-NAND flash memory.16

Despite the difference in NAND types used between the 960 PRO and 960 EVO, the performance isn't too dissimilar (likely thanks to the EVO's great use of SLC caching):

- 960 PRO — 3,500 MB/s seq. read, 2,100 MB/s seq. write

- 960 EVO — 3,200 MB/s seq. read, 1,900 MB/s seq. write
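To put those figures in context, the sketch below compares them with the theoretical ceilings of the interfaces involved. It assumes the drives sit on a PCIe 3.0 x4 link (8 GT/s per lane with 128b/130b encoding) and uses SATA III (6 Gb/s with 8b/10b encoding) as the baseline for a traditional SSD. Those link details come from the interface specifications rather than from this post, and real drives land below the ceiling.

```python
def pcie3_ceiling_mb_s(lanes):
    """Theoretical PCIe 3.0 bandwidth in MB/s: 8 GT/s per lane, 128b/130b encoding."""
    return 8e9 * (128 / 130) / 8 / 1e6 * lanes


def sata3_ceiling_mb_s():
    """Theoretical SATA III bandwidth in MB/s: 6 Gb/s line rate, 8b/10b encoding."""
    return 6e9 * (8 / 10) / 8 / 1e6


# Sequential read figures quoted in the list above.
SEQ_READ_MB_S = {"960 PRO": 3500, "960 EVO": 3200}

pcie_x4 = pcie3_ceiling_mb_s(4)
sata = sata3_ceiling_mb_s()
print(f"PCIe 3.0 x4 ceiling: {pcie_x4:.0f} MB/s, SATA III ceiling: {sata:.0f} MB/s")
for drive, read in SEQ_READ_MB_S.items():
    print(f"{drive}: {read / pcie_x4:.0%} of the x4 ceiling, {read / sata:.1f}x the SATA ceiling")
```

Both drives are already close to what four lanes can carry, which is part of why the gap between them is hard to notice in practice.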

I went with the 960 EVO to save a bit of money given that I probably would not be able to tell the difference between those two in terms of speed. I was not concerned with lifespan as I would likely upgrade long before I saw any diminishing performance.

I got two 1TB 960 EVOs. I started this build thinking I would have a dual-boot Windows 10 and macOS hackintosh machine. I ended up deciding against that for a variety of reasons, including having to limit my initial hardware choices to only things that would be friendly for a hackintosh setup. Then I thought maybe I would just RAID 0 the two SSDs but decided against that (more on that later). I just ended up making one a dedicated scratch disk for Lightroom to store photos I'm currently working on. It feels safer that way in case I do something that somehow nukes my main OS drive (though I always have the photos backed up to the NAS and Backblaze so it wouldn't matter much).

A note about 3D XPoint: Intel has a promising new type of memory technology called 3D XPoint memory that they have started selling under their new Optane SSD brand. It's really expensive for the time being but very fast and something to keep an eye on in the future.

Uh, this is a Lightroom PC and you only have 2TB of storage??

If I didn't have my 12TB Synology NAS to archive photos I was done editing, I would have opted to also get a large internal mechanical hard drive like this Western Digital Black series drive if I was going to use it on its own. If I was going to use it in a RAID array, I'd get several of the WD Red Pro or Seagate IronWolf Pro drives (they have NAS/RAID specific features like TLER).

- Samsung 850 PRO: Great SATA SSD

- Corsair Neutron XTi: Fastest SATA SSD

- Intel SSD 600p Series: Cheaper M.2 SSD

- Intel Optane SSD 900P: Highest end 3D XPoint SSD

- WD Black 6TB HD: Performance Hard Drive

RAM

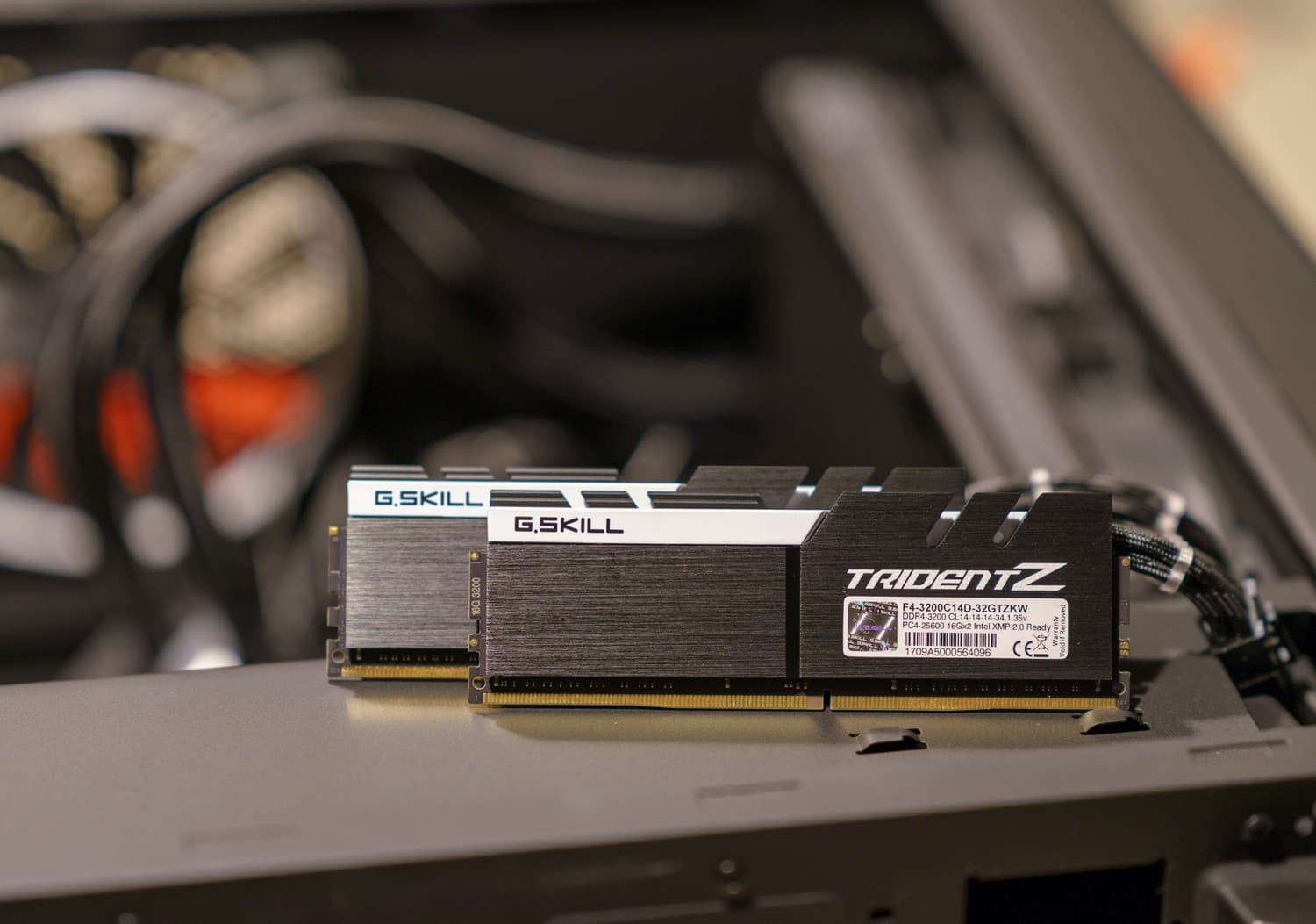

2 x 16GB G.SKILL Trident Z DDR4-3200 CL14

I feel like RAM is an often overlooked piece of vital computer hardware for all but the more experienced computer enthusiasts. When it comes to RAM it's not just about picking enough so that your applications have enough room to play and don't need to unnecessarily keep paging to your SSD.

You at least need good enough RAM so you don't have stability issues. Bad RAM can lead to a myriad of stability issues and odd computer behavior. I will just reiterate that this is not an area you want to cheap out on. Unfortunately, RAM prices are so high these days17 it's actually pretty hard to "cheap out" in this space.

I began by looking for only 2 sticks of RAM instead of 4 for a few reasons. First, I plan to overclock a bit so I wanted to only use 2 sticks to reduce strain on the integrated memory controller. Second, the Z370 chipset on this motherboard only supports dual channel so there would be no performance benefit going with 4 sticks. Not to mention the extra heat created with 4 sticks crammed right next to each other.

I wanted two low latency matched 16GB RAM sticks for a total of 32GB. While I definitely wouldn't mind having more RAM, 32GB is more than sufficient for my needs and performance is a higher concern for me. And well, it's really not possible to find really fast, low latency RAM in anything larger than 16GB sticks; even that is a challenge. The very high speed RAM kits tend to only come in 8GB sticks.

When it comes to RAM, there's a lot more to look at beyond just the number of gigabytes. Speed and latency play a very large and interconnected role.

Overclocked RAM can have a sizable performance improvement when it comes to frames per second while playing some CPU-bound games. The performance variance for general system tasks seems to be much less pronounced on an Intel machine: Intel machines are much less picky with RAM than AMD Ryzen machines.18

The Intel Core i7 8700K with a Z370 motherboard supports a Coffee Lake DDR4 reference speed of 2666MHz. However, even if you have DDR4-2666 or faster installed, you won't get this speed out of the box without any configuration. It will run at 2133MHz due to the base JEDEC DDR4 specification. Fortunately, all you have to do is enable a memory setting in the UEFI settings called XMP (Extreme Memory Profile). This will automatically bring your memory up to its rated speed and timings, adding a bit more voltage if necessary.19

It's rather easy these days to overclock RAM on its own, separate from any CPU overclock. While the performance benefits on an Intel system outside of gaming probably don't make it worth your while to go overboard with extremely pricey RAM, just setting your RAM to its rated XMP settings can get you on your way quickly.

When I was overclocking long ago, the memory controller resided in the northbridge chip of the chipset and overclocking was frequently done by just increasing the front-side bus speed (in addition to the CPU multiplier if it was unlocked for the CPU) that links the processor and RAM, usually with a 1:1 memory divider if it would work. With modern Intel machines the memory controller resides inside the processor itself and it's much less common to overclock the base clock (BCLK) when RAM speed can be manipulated entirely on its own easily. Well that and because BCLK overclocking is tricky and can easily cause system-wide instability from RAM to devices on the PCIe bus.

Nerdy bits about RAM frequency and latency

When you shop for RAM, you typically see 3 things: size in GB, speed in MHz (like DDR4-3200 for 3200MHz) and finally latency or timings, typically shown with four numbers like 14-14-14-34. You may also see the latency listed as a CAS latency or CL value, which simply refers to the first, and for us the most important, of those four timing numbers.

In general, faster speeds and lower latencies are always better. But they are interconnected when it comes time to measure the absolute latency. Let's talk about what that means.

CAS latency (CL) does not represent a time value. Rather, it refers to the number of clock cycles it takes from the time the CPU (well, integrated memory controller inside the CPU to be more accurate) requests some data from the RAM to the time the RAM can supply that data back. For example, RAM with a CAS latency of 14 will take 14 clock cycles to return that data and CL16 RAM at the same speed would take 2 more cycles to get the same number of operations done.

So what is this clock cycle? The advertised speed of the RAM is the number of transfers per second it can achieve. In the case of DDR4-3200 RAM this is 3.2 billion transfers per second. A single clock cycle is the smallest unit of time the RAM works in. There's one more wrinkle in this: we're talking about DDR, which stands for Double Data Rate. This kind of modern RAM transfers 2 pieces of data per cycle, so the DDR4-3200 we've been talking about is actually only clocked at 1600MHz but effectively operates at 3200MHz.

Now that we know how latency and frequency are related, we can begin to calculate absolute latency and see how it varies as RAM frequency increases.

Let's say we have DDR4-2666 RAM with a CAS latency of 14. First, we need the true clock speed, which is half of the 2666MHz data rate. To get the absolute latency we plug it into this: 1/(data rate/2) * CAS latency. That would be 1/(1333MHz) * 14 = 10.5 nanoseconds to complete an operation requested by the CPU.
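The same arithmetic is easy to script if you want to compare kits while shopping. This is a minimal sketch of the formula above; the function and parameter names are arbitrary.

```python
def absolute_latency_ns(data_rate, cas_latency):
    """Absolute CAS latency in nanoseconds.

    data_rate is the advertised DDR rating (e.g. 2666 for DDR4-2666).
    The real clock is half of that because DDR transfers twice per cycle.
    """
    clock_mhz = data_rate / 2
    cycle_time_ns = 1000 / clock_mhz  # one cycle at 1000MHz lasts 1ns
    return cas_latency * cycle_time_ns


print(f"DDR4-2666 CL14: {absolute_latency_ns(2666, 14):.2f} ns")
print(f"DDR4-3200 CL14: {absolute_latency_ns(3200, 14):.2f} ns")
```

Holding CL fixed and raising the speed shortens each cycle, so the same 14 cycles take less wall-clock time.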

If you run a few different types of RAM through that equation, you can see the difference in absolute latencies. I only plotted a few RAM speeds and latencies in there, but it's possible to buy RAM at frequencies as high as DDR4-4266 CL17 (as far as I've seen).20

| CAS latency | DDR4-2400 | DDR4-2666 | DDR4-3000 | DDR4-3200 | DDR4-3333 | DDR4-3400 | DDR4-3600 |
|---|---|---|---|---|---|---|---|
| CL12 | 10ns | No such RAM exists* | | | | | |
| CL13 | 10.83ns | 9.75ns | No such RAM exists* | | | | |
| CL14 | 11.67ns | 10.5ns | 9.33ns | 8.75ns | No such RAM exists* | | |
| CL15 | 12.5ns | 11.25ns | 10ns | 9.38ns | 9ns | 8.82ns | 8.33ns |
| CL16 | 13.33ns | 12ns | 10.67ns | 10ns | 9.6ns | 9.41ns | 8.89ns |
| CL17 | 14.17ns | 12.75ns | 11.33ns | 10.63ns | 10.2ns | 10ns | 9.44ns |

(*While I wasn't able to find RAM for sale at those CAS latencies, it might be possible to increase voltage and overclock the RAM to achieve some of those lower latencies. For example, I ended up running my DDR4-3200 CL14 at 3333 CL14.)

As you can see here, having the lowest latency doesn't mean much when it's not referring to absolute latency, which takes into account the number of cycles happening per second. Makes sense — the faster it's going, the less time each individual cycle takes, so at some point much faster RAM can make up for slightly higher CL timings. Here's another example: DDR4-2400 CL12 has the same 10ns absolute latency as DDR4-3200 CL16.
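For anyone who wants to run more combinations than I listed, here is a minimal sketch that computes the absolute latency for every speed and CL pairing. The helper names are hypothetical, and it fills in every cell, including combinations I couldn't find for sale. Printed values may differ from my numbers in the last digit because of rounding.

```python
# Speeds and CAS latencies to compare (the same ones discussed in this section).
SPEEDS = [2400, 2666, 3000, 3200, 3333, 3400, 3600]
CAS_LATENCIES = [12, 13, 14, 15, 16, 17]


def absolute_latency_ns(data_rate: float, cas_latency: int) -> float:
    """CAS latency in ns: cycles divided by the true clock (half the data rate)."""
    return cas_latency * 2000 / data_rate


def latency_grid(speeds, cas_latencies):
    """Map each CL to a {speed: latency in ns} dict for every combination."""
    return {
        cl: {speed: absolute_latency_ns(speed, cl) for speed in speeds}
        for cl in cas_latencies
    }


def print_grid(grid):
    """Print the grid with speeds as columns and CAS latencies as rows."""
    speeds = list(next(iter(grid.values())))
    print("      " + "".join(f"{s:>8}" for s in speeds))
    for cl, row in grid.items():
        print(f"CL{cl:<4}" + "".join(f"{row[s]:>8.2f}" for s in speeds))


if __name__ == "__main__":
    print_grid(latency_grid(SPEEDS, CAS_LATENCIES))
```

Reading across a row shows how a fixed CL gets faster in absolute terms as the speed goes up, which is the whole point of comparing nanoseconds rather than cycle counts.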

Does this mean you're better off getting cheaper RAM and overclocking it to the desired speed? Well, not quite. First off, there is no guarantee that your cheaper 2400MHz RAM could actually reach an overclock like 3200MHz. It might 1) not be possible, 2) require extra voltage, or 3) only be possible with significantly loosened higher CL timings, which defeats the purpose. As such, it's a good idea to always get the lowest latency RAM you can find, even if you plan to run it overclocked with higher timings. Better to get CL14 RAM and run it at CL15 or CL16 when overclocked much higher, than get CL16 RAM at the same speed but only be able to get it to work overclocked at CL18 or higher.

For all those reasons above, I ended up going with 2x16GB DDR4-3200 CL14 RAM. It's among the highest frequency and lowest latency RAM you can find.21 This should give me solid headroom to overclock the RAM past 3200MHz and still have a low CL even if I have to loosen it up a bit. In addition, this G.SKILL RAM uses Samsung's B-die chips, which are well regarded for their performance and overclocking ability.

And as a minor point, I was looking for RAM that would feel more at home in my mostly black PC and wasn't some obnoxious bright color.

PSU

Corsair AX860

Back when I was just getting into building computers some 15+ years ago, power supplies felt like they were largely overlooked by the DIY computer building community. The thinking was something like: just get something that's 300 watts or so with a big, heavy heatsink and a fan that isn't too loud and you were probably good to go.

In reality, the power supply is one of the most important parts of a stable and performant rig. Cheaping out on the power supply can result in random computer stability issues and restarts. In a worst-case scenario a bad PSU could damage or even kill some of your components. The criteria for picking a good power supply have become a bit more stringent in the last few years with power-hungry graphics cards (modern graphics cards can use much more power than the CPU).

In addition, overclocking is no longer some mystical dark art. Motherboard manufacturers cater specifically to this crowd with high quality capacitors, PWMs and VRMs along with UEFIs featuring comprehensive voltage and control settings for just about everything.

When it came time to pick my power supply, I looked at a few things in particular:

- Quiet: These days it's easier to find a PSU with a larger single fan (120 to 140mm in size) instead of the louder dual 80mm fans you used to find in power supplies. However, some more advanced power supplies have what they call a zero RPM mode: they don't even need to spin the PSU fan(s) until the load reaches some percentage of total output. Even then the fan only speeds up incrementally as needed. As such, it might be worth getting a more powerful PSU than you need, just so you can stay closer to that zero RPM mode with your regular idle/light use load.

- Efficient: Again, it's fairly common these days to find PSUs with the 80 Plus efficiency designation, of which there are now a bunch of tiers: bronze, silver, gold, platinum and titanium. The higher the efficiency of the PSU, the less power that turns into heat instead of becoming DC power for your computer. This usually also means less heat that the PSU needs to deal with and pump out of your case. For example, if you have a 1000W PSU with 80% efficiency, then your PSU will likely pull around 1250W from the wall outlet to generate the 1000W peak output. That'll cost you extra on your electricity bill compared to a PSU with a higher efficiency rating.

- Fully modular: A fully modular PSU has fully detachable cables. So if you have only a few internal drives and peripherals, you just plug in the cables you need and don't have to worry about where to hide the unused cables inside your case. This makes cable management much, much easier. It also improves airflow, since fewer cables are obstructing it. Just make sure you're getting a PSU with enough connections (and wattage) to support the number of devices you need to power inside your case.22

- Sufficient wattage with a bit of headroom: The best way to figure out how much wattage your build will need is with a power supply calculator. You select your exact parts and it'll estimate max load wattage and provide a recommendation (about 10% more wattage). The nice thing about this calculator in particular is that it lets you estimate usage if you overclock your CPU and GPU as well. In my case, it said my rig would use close to 600W when overclocked a bit.
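The efficiency and headroom arithmetic from the list above can be sketched in a few lines of Python. The function names are hypothetical, the 10% headroom is just the rough figure mentioned above, and real efficiency varies with load, so treat the results as ballpark numbers only.

```python
def wall_draw_watts(dc_output_watts: float, efficiency: float) -> float:
    """Power pulled from the outlet to deliver a given DC output."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be a fraction between 0 and 1")
    return dc_output_watts / efficiency


def waste_heat_watts(dc_output_watts: float, efficiency: float) -> float:
    """Power lost as heat inside the PSU."""
    return wall_draw_watts(dc_output_watts, efficiency) - dc_output_watts


def recommended_psu_watts(estimated_load_watts: float, headroom: float = 0.10) -> float:
    """Estimated max load plus a headroom margin (10% assumed by default)."""
    return estimated_load_watts * (1 + headroom)


def load_fraction(load_watts: float, psu_capacity_watts: float) -> float:
    """How much of the PSU's rated capacity a given load uses."""
    return load_watts / psu_capacity_watts


if __name__ == "__main__":
    print(f"1000W at 80% efficiency pulls {wall_draw_watts(1000, 0.80):.0f}W from the wall")
    print(f"600W estimated load suggests at least {recommended_psu_watts(600):.0f}W")
    print(f"600W on an 860W PSU is {load_fraction(600, 860):.0%} of capacity")
```

The load fraction is the number to compare against whatever threshold a given PSU lists for its zero RPM mode, which differs by model.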

There's also the whole single vs multiple 12V rail discussion to be had if you're really curious.

After a bit of research I ended up going with the Corsair AX860. It has the zero RPM fan mode I was talking about, is fully modular and has an 80 Plus Platinum rating. Corsair also has an AX860i model that has more functionality (there's a desktop app to control it) but there are some mixed reviews about fan issues and buggy software so I decided to avoid it.

At 860W this PSU is considerably more powerful than I need right now. I opted for something like this to provide enough headroom for high CPU and GPU overclocks and to future-proof myself a bit in case I ever decide to add a second graphics card or do something crazy like upgrade to an overclocked 10+ core processor with a much higher TDP. Had that not been the case I could have gone with a power supply in the 650-750W range.

- Corsair RM750x: affordable lower-wattage modular PSU

- Corsair AX760: high-end lower-wattage modular PSU

- Corsair AX1500i: 1500W overkill

Keyboard & mouse

Apple Magic Keyboard, Logitech MX Master 2S & Evoluent VerticalMouse 4

I've more or less always used and loved Apple keyboards and the slim new Magic Keyboard is no exception. It connects over Bluetooth and it's possible to configure it to work as expected with Windows 10. The new Magic Keyboard has keys with limited travel and some folks may not feel comfortable typing on it. You'll have to try it for yourself. One thing is for certain though: I absolutely hate loud clicky-style mechanical keyboards with long key travel. So no keyboards with Cherry MX mechanical switches for me.

As for the two mice, I often switch between a regular mouse and a vertical mouse to allay some RSI wrist pain from time to time. More detail on my Stuff I use page.

- Logitech G603 LIGHTSPEED: affordable wireless gaming mouse

- Razer Lancehead: high-end wireless gaming mouse

- Microsoft Modern Keyboard with Fingerprint ID: Microsoft keyboard

Speakers

Bose SoundLink Mini II

This one probably seems the most out of place compared to everything else on this list. Yes, it's a tiny portable speaker that I'm using for my desktop computer. I just didn't want a large multiple speaker setup taking up space on my desk, especially one requiring some bulky power adapter and multiple cables.

It's only for light use like watching videos on the web, basic Spotify background music or casual gaming. I have a much larger and more powerful Sonos system for when I really want to play music. And as for sound while gaming, that's not a priority for me. I make do with just this or plugging in headphones.

There's a newer model of the SoundLink but it's not directional and didn't seem like what I wanted. The SoundLink Mini II is tiny but packs a good punch. It can be powered via micro-USB and connects via Bluetooth or a standard 3.5mm audio aux cable. I have a micro-USB cable hidden under a cable management shelf under my desk that I can pull out whenever I need to charge this or my Logitech MX Master 2S mouse.

- Mackie CR3 reference monitors: affordable desktop monitors

- Audioengine HD3: high-end Bluetooth desktop monitors

- Audioengine A5+: larger bookshelf speakers

- Razer Nommo Pro (THX certified): gamer's choice

Operating System

Microsoft Windows 10 Home, USB flash drive

Nothing much to say here: Windows 10 Home. I have little use for any of the features included in Windows 10 Pro.

Parts list

- CPU: Intel Core i7 8700K, $399

- CPU cooler: Corsair Hydro H115i AIO liquid CPU cooler, $139

- Graphics card: ASUS ROG STRIX GTX 1080 Ti, $779

- Motherboard: ASUS ROG Maximus X Hero (w/ Wi-Fi), $279

- RAM: 2x16GB G.SKILL Trident Z DDR4-3200 CL14, $322

- Storage: 2x1TB Samsung 960 EVO M.2 SSDs, 2x $479

- PSU: Corsair AX860, $169

- Case: NZXT S340VR Elite (matte black), $117

- Display: Dell Ultrasharp 27" UP2718Q, $1499

Miscellaneous

- VESA arm: Humanscale M8, $399

- Speaker: Bose SoundLink Mini II, $179

- Mouse: Logitech MX Master 2S, $90

- Keyboard: Apple Magic Keyboard, $99

- Thermal compound: Arctic Silver 5, $14

- Case USB hub: NZXT Internal USB Hub, $25

- Case fans: Corsair AF120 Quiet Edition fan, $15

- Case fans: Corsair AF140 Quiet Edition fan, $18

- Graphics card brace: MnpcTech GTX 1080 Ti support bracket, $49

- Custom PSU cables: Custom Corsair paracord sleeved cables, $144

- Operating system: Windows 10 Home USB stick, $119

- Cable management tray: Humanscale NeatTech Mini, $99

- External cable sleeving: 1/2-inch self-closing sleeving, $12

- Cable ties: 25 velcro cable ties, $8

SUBTOTAL — $5,931

The display

Dell UP2718Q 27" 4K display

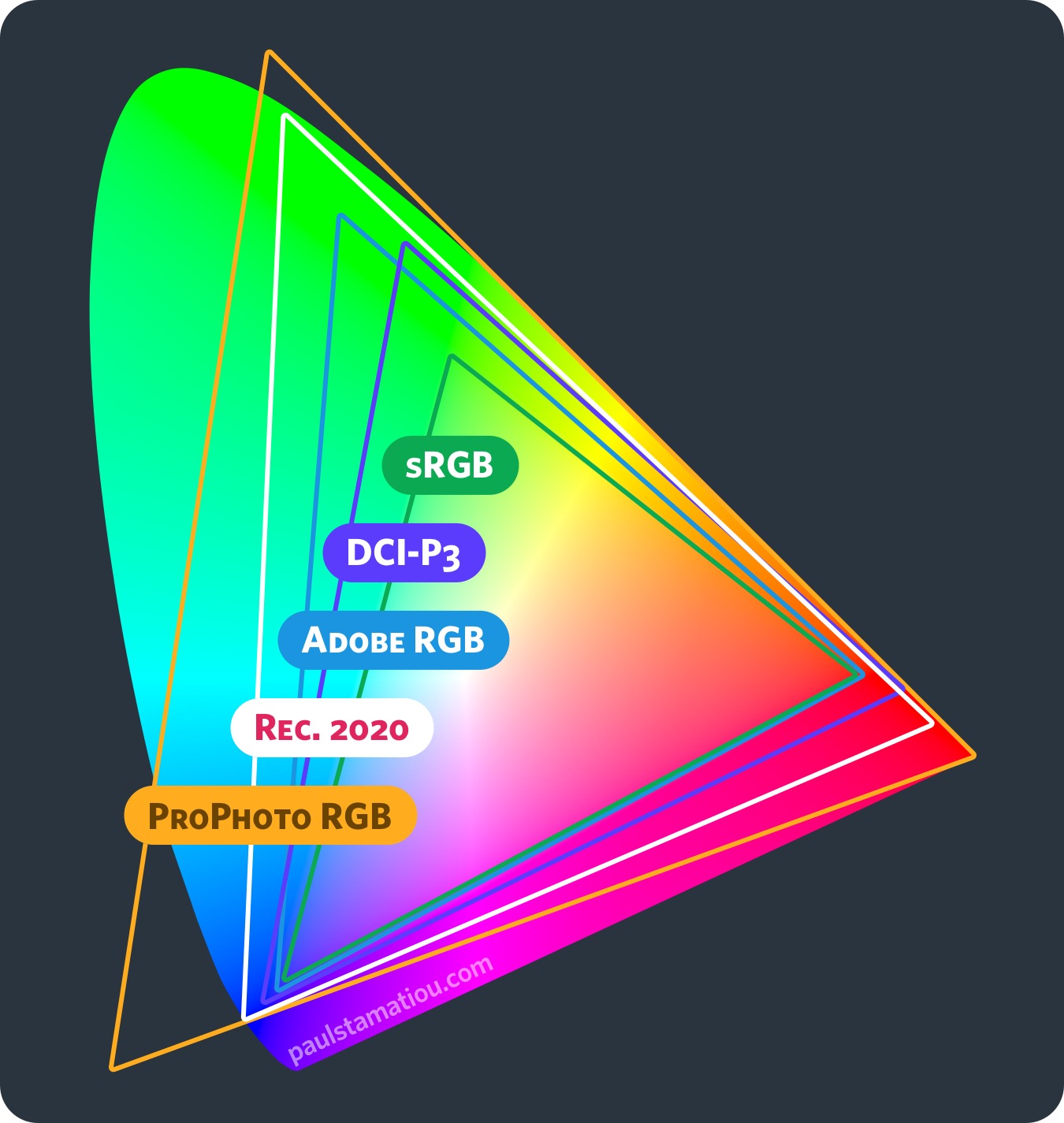

I was definitely spoiled coming from a 5K iMac. It has a stellar display with great color accuracy and incredible brightness. Apple even tossed out the industry's way of advertising monitors based on sRGB or Adobe RGB color space accuracy and instead slightly adapted the DCI-P3 color space, originally meant for projectors, to be used for device displays and called it Display P3. Apple is seemingly ahead of the game here (well, it's debated if P3 is a good move for consumers).

I had a daunting challenge ahead of me if I was to find a quality replacement for that display.

I'm not a professional photographer. I'm merely a hobbyist.

That means I don't get paid to take photos or do anything where color accuracy is mission critical, such as shooting and post-processing portraits with accurate skin tones or working with print. That means I don't care quite enough to use a pricey 10-bit Nvidia Quadro graphics card paired to a 10-bit professional monitor with internal LUTs for calibration with great homogeneity and extremely high color accuracy across the board like some of the NEC PA MultiSync models or Eizo ColorEdge displays.

It also means I don't plan to buy and meticulously use a color calibration device. That means I don't typically share my images with others in large lossless formats. That means I don't care to limit myself to a 1920x1080 or 2560x1440 resolution display for increased Lightroom performance.

I, on the other hand, spend my time publishing my photos online. I will knowingly compress and sacrifice a good bit of image quality to make it easier for people to load my shots in a photoset. I know people view my compressed shots on a myriad of displays and devices where photos could look slightly different than how I might have intended. I also don't quite care enough to embed file-size-increasing ICC color profiles, save different versions of my files and serve up an sRGB version or a wide-gamut version depending on the device.23 Whatever, I'm fine with that. (Okay, I do want to spend some time researching how to serve up wide-gamut images on my site at some point.)

So what am I looking for?

What matters for my hobbyist photography use

- 4K or 5K resolution: Yes, I know this comes at the expense of Lightroom speed but I just love having more space.

- Good color accuracy: There are a lot of ways you can define good, but for me this just means something exceeding the sRGB color space and ideally covering a solid portion of either the DCI-P3 or Adobe RGB color spaces.24

- Sub-10ms response time for occasional gaming

- Ability to use the display with both Macs and PCs easily

What this meant was that right off the bat I was not looking for fast 144Hz gaming monitors that were not 4K, had a questionable physical appearance geared towards gamers and subpar color accuracy. Which is great because I won't have to describe Nvidia G-Sync and AMD FreeSync adaptive sync technologies meant to reduce screen tearing while gaming.

At first I did a lot of searching for a 5K display (did you know Dell has an 8K monitor out now too?). I was going to break down a list of all the current 5K monitors on the market and what was not good about each of them but I'll spare you the details. I just don't think there are any exceptional 5K displays on the market at this moment that satisfy my above criteria. I talk about this in more detail below, but this is changing and it may be a good time to wait a bit longer. For example, LG has some 21:9 5K Nano IPS displays with great color space coverage coming out this year.

We're in this weird time of transition away from DisplayPort 1.2 to 1.3/1.4, so if you want to run 5K at 60Hz on a PC now, you're likely going to need to use two cables. And then there's the mixed bag of trying to output video via Thunderbolt on a PC for certain displays. For example, if you want to run the LG Ultrafine 5K monitor (the one made for Macs) at 5K 60Hz as intended, you have to use a very particular motherboard and PCIe combination to pass the graphics through as a valid Thunderbolt 3 signal. It's all just a hassle right now. I'll wait.
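To see why a single DisplayPort 1.2 cable falls short for 5K at 60Hz, here is a back-of-the-envelope sketch. It assumes uncompressed 8-bit-per-channel color (24 bits per pixel) and ignores blanking intervals, so real requirements are somewhat higher. The link figures are the effective four-lane payload rates after 8b/10b encoding (17.28 Gbit/s for HBR2, 25.92 Gbit/s for HBR3), and the function names are my own.

```python
# Effective four-lane payload bandwidth in Gbit/s, after 8b/10b encoding overhead.
DP_EFFECTIVE_GBPS = {
    "DisplayPort 1.2 (HBR2)": 17.28,
    "DisplayPort 1.3/1.4 (HBR3)": 25.92,
}


def uncompressed_video_gbps(width: int, height: int, refresh_hz: int,
                            bits_per_pixel: int = 24) -> float:
    """Raw pixel data rate in Gbit/s, ignoring blanking (a simplification)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


def fits_on_link(required_gbps: float, link_name: str) -> bool:
    """True if the required rate is within the link's effective bandwidth."""
    return required_gbps <= DP_EFFECTIVE_GBPS[link_name]


if __name__ == "__main__":
    modes = {"4K 60Hz": (3840, 2160, 60), "5K 60Hz": (5120, 2880, 60)}
    for mode, (w, h, hz) in modes.items():
        need = uncompressed_video_gbps(w, h, hz)
        for link in DP_EFFECTIVE_GBPS:
            verdict = "fits" if fits_on_link(need, link) else "does not fit"
            print(f"{mode} needs about {need:.2f} Gbit/s: {verdict} on {link}")
```

Under these assumptions 4K at 60Hz fits comfortably on one DisplayPort 1.2 cable while 5K at 60Hz does not, which is why current 5K setups split the signal across two cables.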

I got a cheap Dell 4K.. and I didn't like it.